Host a website with AWS S3 and CloudFront

In this tutorial, you will learn how to host a website with AWS services: Amazon S3 and Amazon CloudFront.

Introduction

AWS is a complete cloud platform offering hundreds of services, and you can build very complex architectures for your applications with it. However, it’s not only for big projects. If your requirements are straightforward, such as hosting a small static landing page, you can meet them quickly. In this tutorial, you will build one and see how easy it is.

Prerequisites

- AWS Account

What is a Static Website?

A static website consists of HTML, CSS, JavaScript and media assets (such as images, audio and video). The main characteristic of a static website is that it runs on the client side, meaning in the user’s browser. These days you can develop complex web applications with frameworks such as Angular, React and Vue. However, these are still JavaScript frameworks and libraries that run in the browser, so their output can be distributed as a static website.

Main advantages of static websites:

- ✅ Performance: Once the site is downloaded, everything runs in the browser.

- ✅ Security: There is no server-side code or login page to attack; you only distribute content.

- ✅ Cost: It’s generally cheaper to host static websites.

- ✅ Maintenance: With a managed service such as Amazon S3, you don’t need to maintain your own servers.

Without further ado, let’s get going with the implementation.

Part 1: Host a Single HTML website with Amazon S3

In this part, you will create a new bucket, set up permissions, upload your HTML file and test your website live using the S3 website endpoint URL.

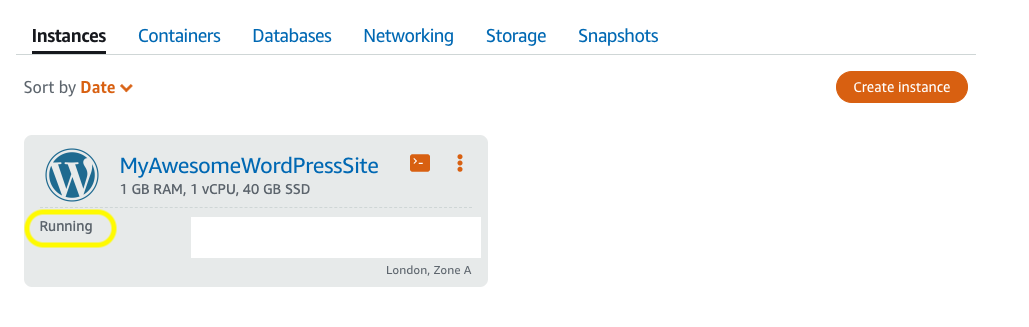

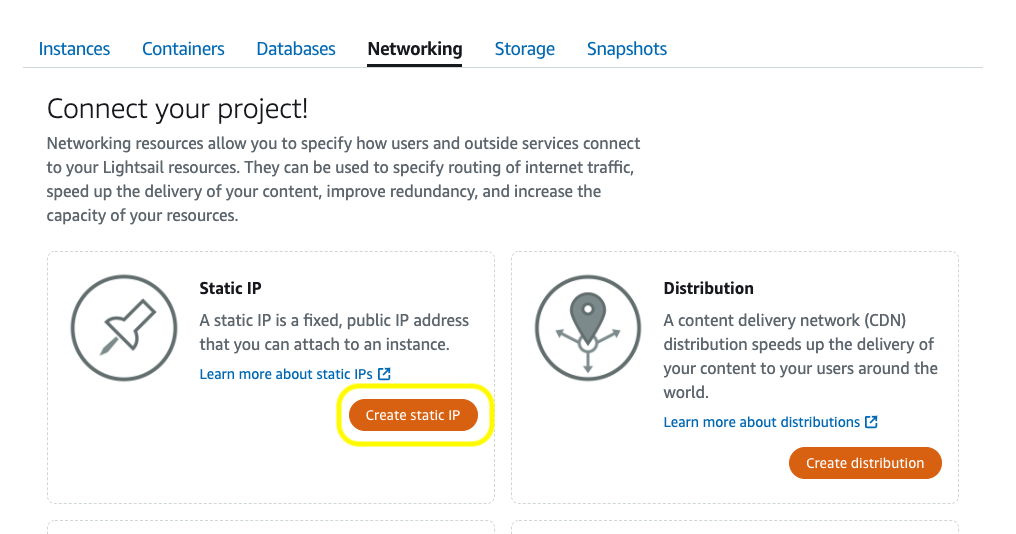

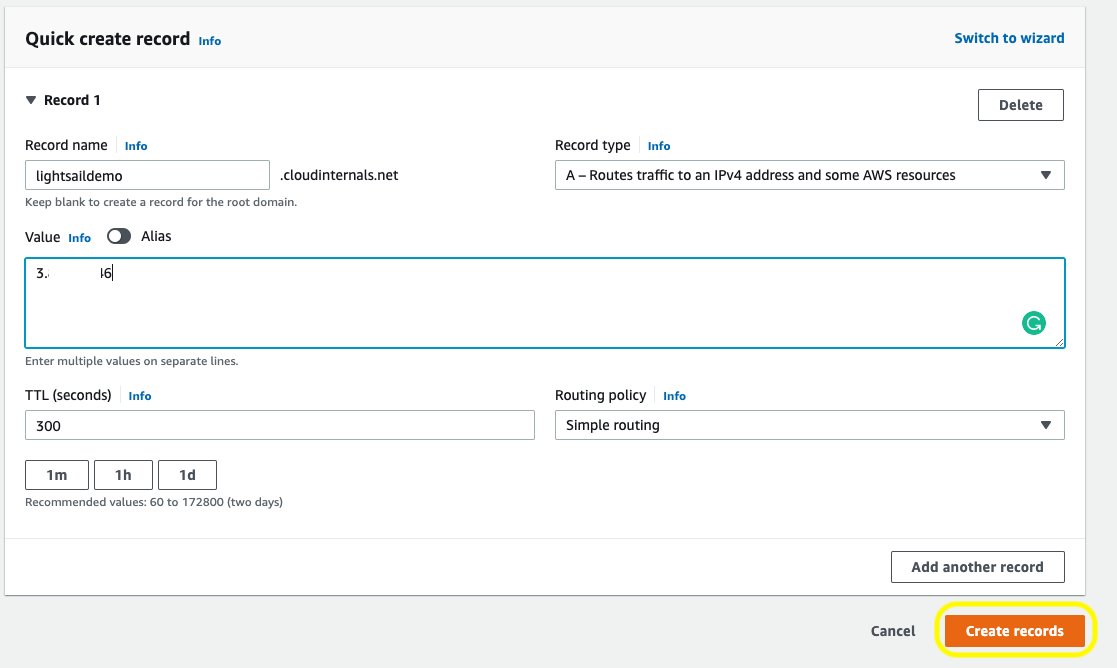

Step 1: Open the Amazon S3 dashboard and click the Create bucket button

Step 2: Create a new bucket

Naming your bucket is important if you plan to use the S3 website endpoint. Depending on the region, S3 website endpoints use one of two formats:

- s3-website dash (-) Region: http://bucket-name.s3-website-Region.amazonaws.com

- s3-website dot (.) Region: http://bucket-name.s3-website.Region.amazonaws.com
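You may want to work out the endpoint for a given bucket ahead of time. The short Python sketch below builds it from the bucket name and region. It’s only an illustration. The set of dash-style regions is an assumption based on the AWS "Website endpoints" documentation at the time of writing. Newer regions such as London (eu-west-2) use the dot form, so check the current page before relying on the list.

```python
# Regions assumed (from the AWS "Website endpoints" docs at the time of writing)
# to use the older dash form "s3-website-<region>". Other regions use the dot form.
DASH_REGIONS = {
    "us-east-1", "us-west-1", "us-west-2",
    "ap-southeast-1", "ap-southeast-2", "ap-northeast-1",
    "eu-west-1", "sa-east-1", "us-gov-west-1",
}


def website_endpoint(bucket: str, region: str) -> str:
    """Return the (HTTP-only) S3 website endpoint URL for a bucket."""
    separator = "-" if region in DASH_REGIONS else "."
    return f"http://{bucket}.s3-website{separator}{region}.amazonaws.com"


print(website_endpoint("volkan.rocks", "eu-west-2"))
# http://volkan.rocks.s3-website.eu-west-2.amazonaws.com
```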

As you can see, your bucket name becomes part of the URL, so choose it carefully. Also, you cannot rename a bucket after you’ve created it.

There are quite a few rules for bucket names. You can find the complete list here: Bucket naming rules.
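A quick check like the one below catches the most common naming mistakes before you get to the console. It’s a hypothetical helper that covers only a subset of the rules:

- length
- allowed characters
- first and last character
- adjacent periods
- IP-address-like names
- two reserved affixes

The AWS page remains the authority.

```python
import re

# Lowercase letters, digits, dots and hyphens; first and last character must be
# a letter or digit. This is a subset of the official rules, not the full list.
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")
_IP_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Check a subset of the S3 general purpose bucket naming rules."""
    if not 3 <= len(name) <= 63:
        return False
    if not _NAME_RE.fullmatch(name):
        return False
    if ".." in name:                # no two adjacent periods
        return False
    if _IP_RE.fullmatch(name):      # e.g. 192.168.5.4 is not allowed
        return False
    if name.startswith("xn--") or name.endswith("-s3alias"):
        return False                # reserved prefix/suffix
    return True
```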

If you have a domain name, use it as your bucket name. Later you will see how to create a DNS entry that points your domain to the S3 bucket, and this only works if the bucket name matches the domain name exactly.

As for region selection, pick the geographical location closest to you and your audience. This helps with latency and costs, and with some regulatory restrictions.

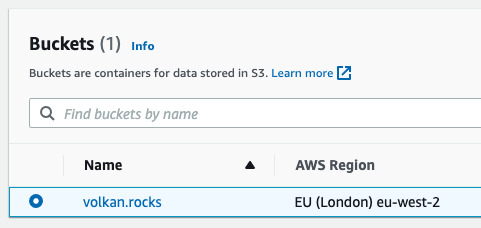

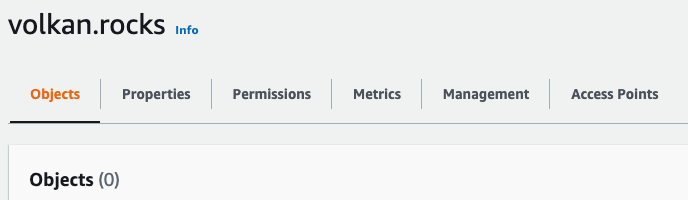

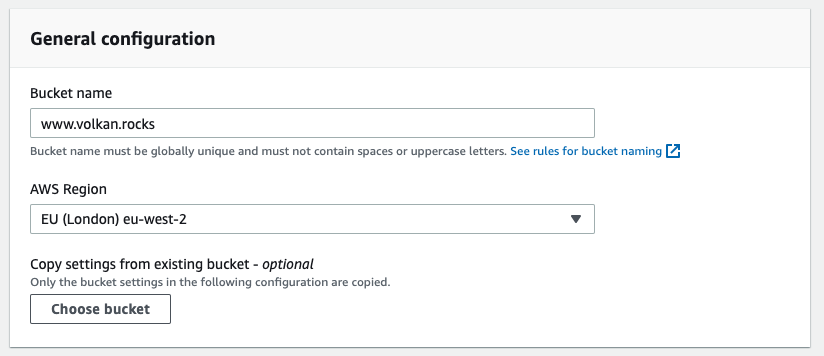

In this example, I will create my bucket in the London region with my domain’s name volkan.rocks.

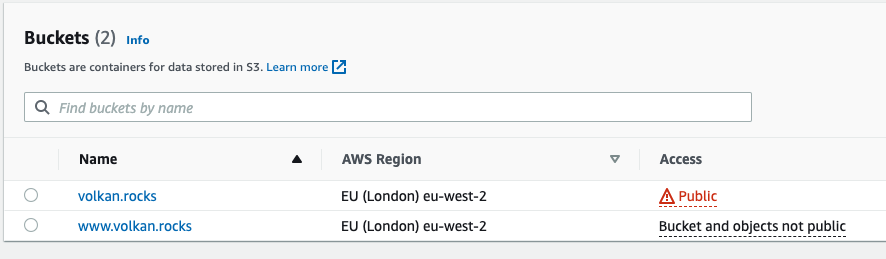

After your bucket has been created, you should be redirected to the S3 dashboard and see your new bucket.

Step 3: Click the bucket name and switch to the Properties tab.

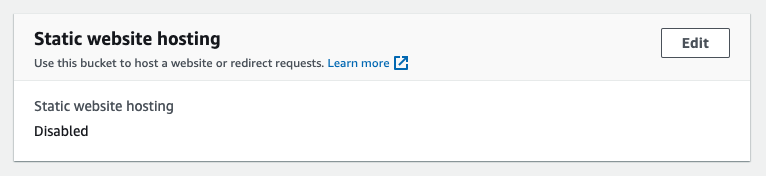

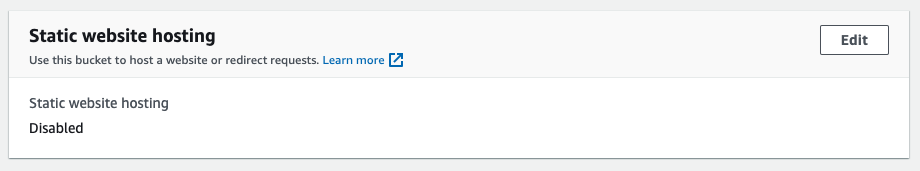

Step 4: Scroll to the bottom of the page and click the Edit button in the Static website hosting section.

Step 5: Enable static website hosting

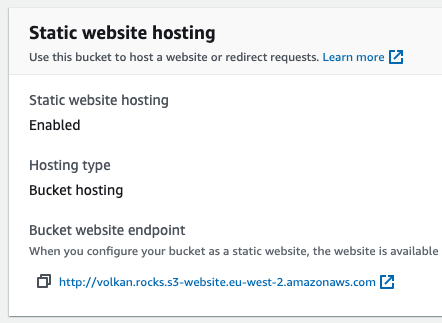

In the static website hosting properties, select Enable. You should now see all the other options.

Update the Index document value to index.html.

Accept the defaults for the rest and click the Save changes button at the bottom of the page.
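You can also script this step. The same setting maps to the `put_bucket_website` call in the AWS SDK for Python (boto3). The sketch below only builds the call’s keyword arguments, so it runs without boto3 or credentials. The helper name is hypothetical, and the client setup in the comment assumes the London region used in this example.

```python
def website_hosting_request(bucket: str, index_document: str = "index.html") -> dict:
    """Keyword arguments for boto3's S3 put_bucket_website call."""
    return {
        "Bucket": bucket,
        "WebsiteConfiguration": {
            # Same as the "Index document" field in the console.
            "IndexDocument": {"Suffix": index_document},
        },
    }


# Usage with boto3 (requires AWS credentials):
#   import boto3
#   s3 = boto3.client("s3", region_name="eu-west-2")
#   s3.put_bucket_website(**website_hosting_request("volkan.rocks"))
```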

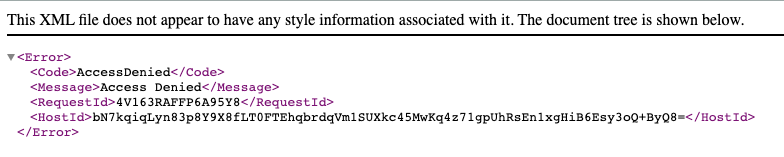

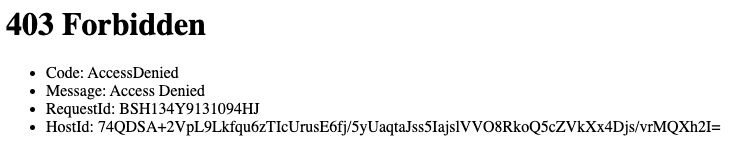

At this point, it’s hard to resist the urge to click the bucket website endpoint link to access your website. Since you haven’t uploaded an index document yet, you will see an error like this:

Don’t worry; you will fix it in the following steps.

Step 6: Create an index.html document

Create a file named index.html using your IDE or any text editor. Paste the following HTML code into the file:

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <title>My website</title>
</head>
<body>
  <h1>My website is live!</h1>
</body>
</html>
```

Step 7: Upload the index.html file to the bucket

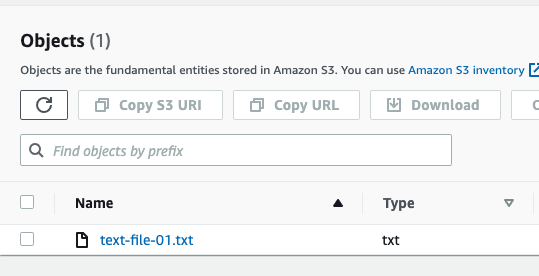

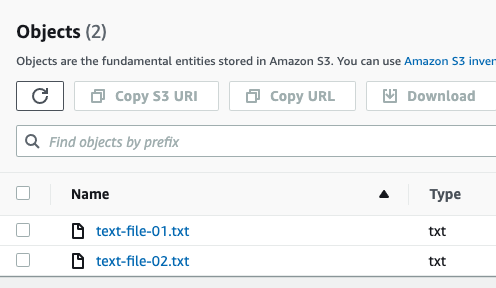

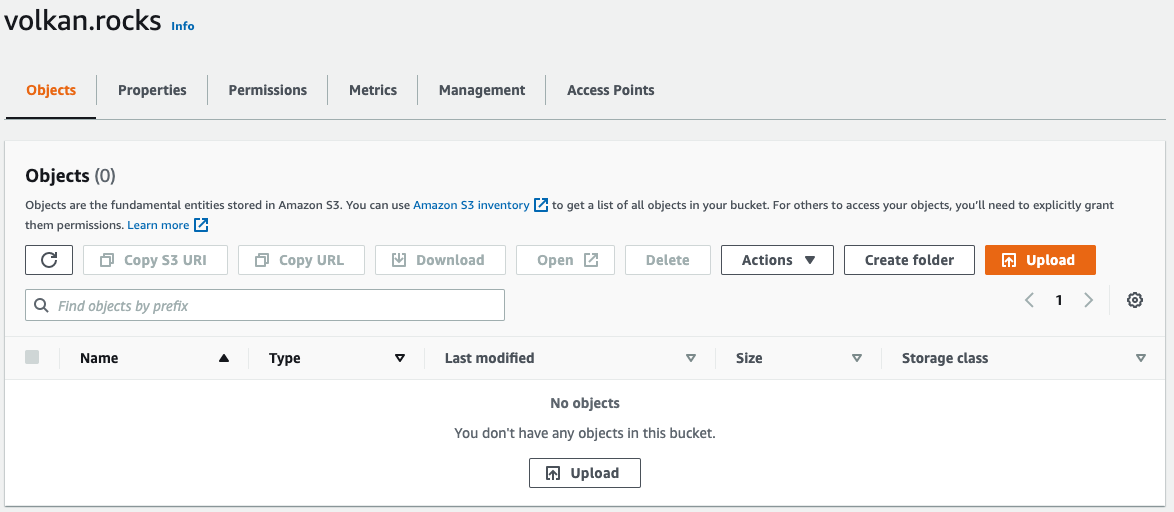

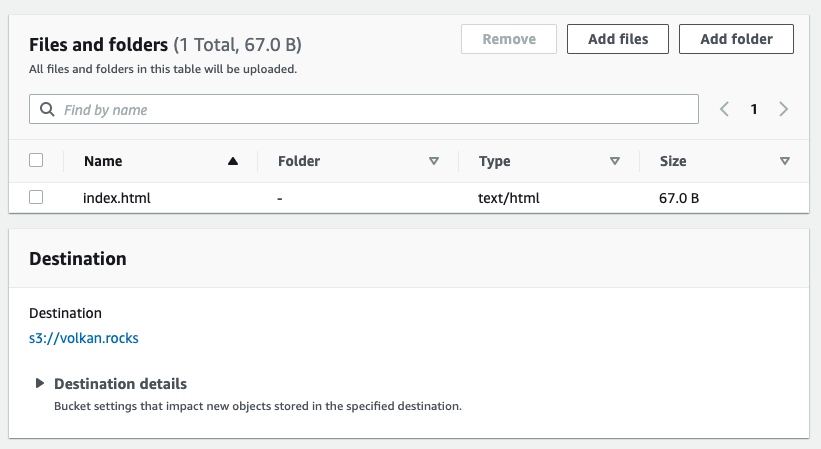

On your bucket page, switch to the Objects tab and click the Upload button.

You can drag and drop the file or click the Add files button and browse your file system. Either way, once you’ve selected the file, you should see something like this:

Click the Upload button at the bottom to transfer the file to the S3 bucket.

Once you’ve seen the Upload succeeded message on the screen, you can click on the Close button.
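Uploading can also be scripted with boto3’s `put_object`. One detail the console handles for you is the `Content-Type` header. If you upload through the API without one, S3 stores the object as `binary/octet-stream`, and browsers will typically download `index.html` instead of rendering it. The hypothetical helper below guesses the type from the file name with Python’s `mimetypes` module.

```python
import mimetypes


def upload_request(bucket: str, key: str, body: bytes) -> dict:
    """Keyword arguments for boto3's S3 put_object call for one website file."""
    content_type, _ = mimetypes.guess_type(key)  # "index.html" -> "text/html"
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        # Fall back to a generic binary type when the extension is unknown.
        "ContentType": content_type or "application/octet-stream",
    }


# Usage with boto3:
#   with open("index.html", "rb") as f:
#       s3.put_object(**upload_request("volkan.rocks", "index.html", f.read()))
```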

Step 8: Update the bucket permissions to enable public access

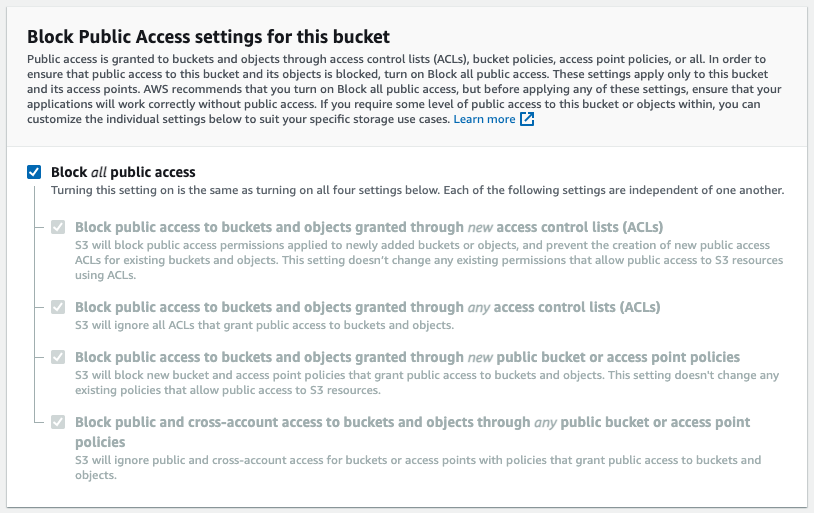

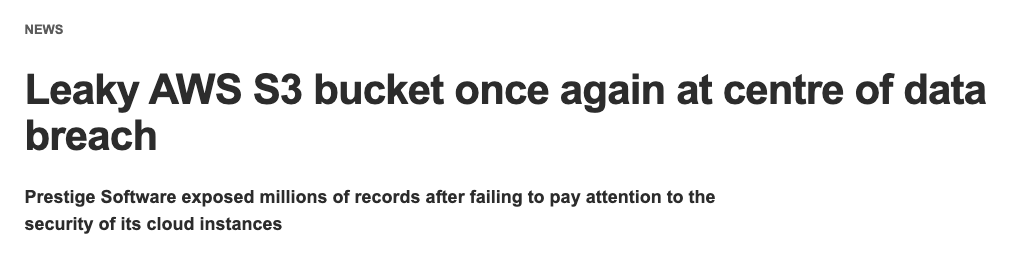

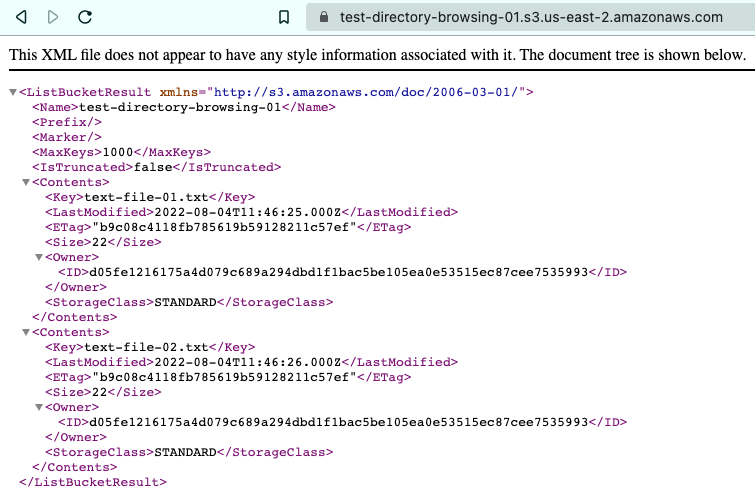

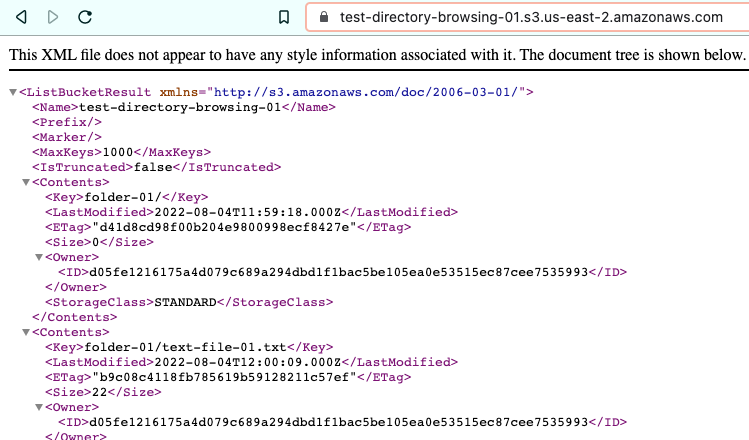

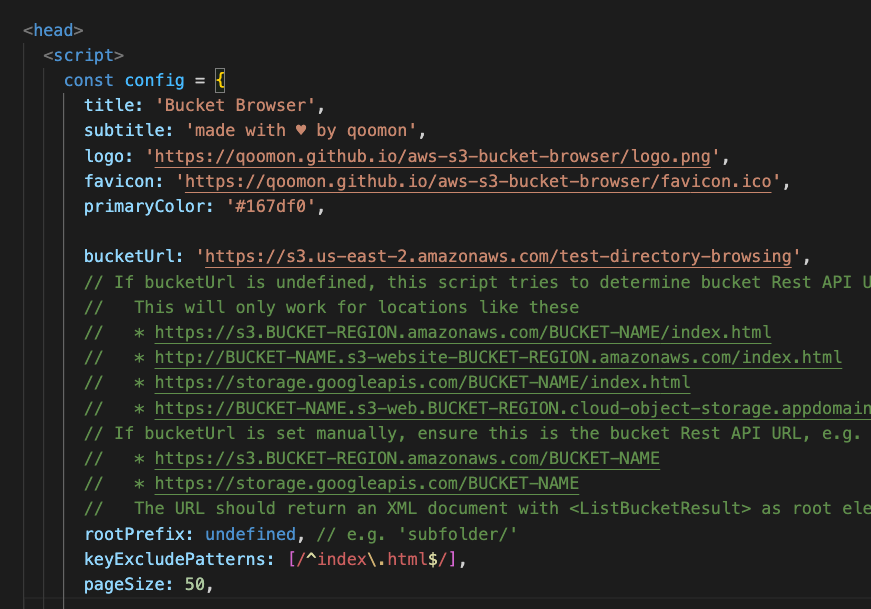

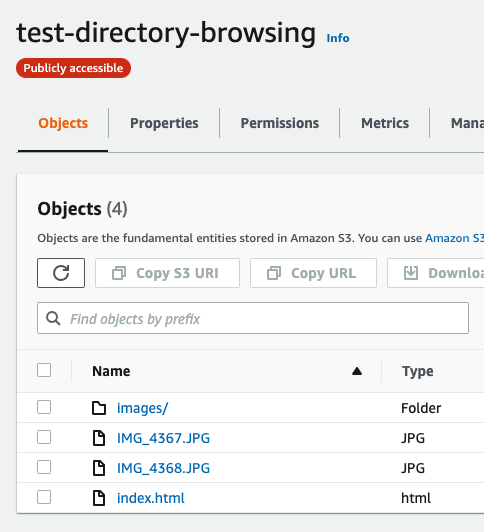

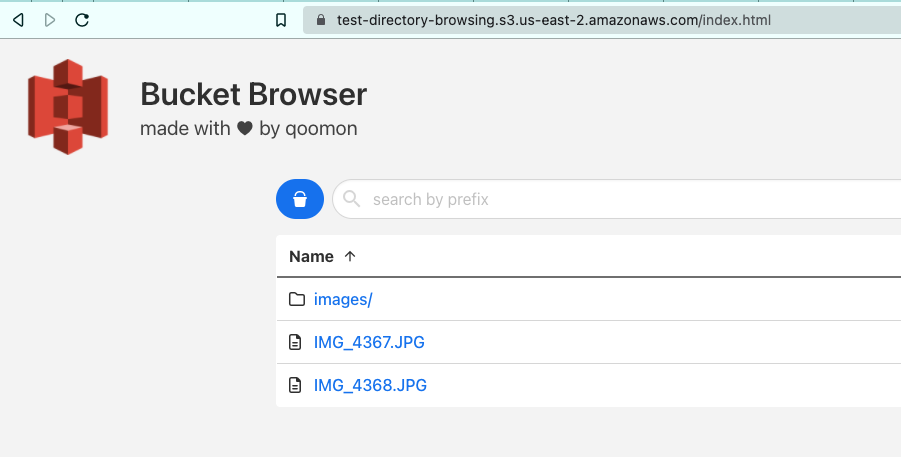

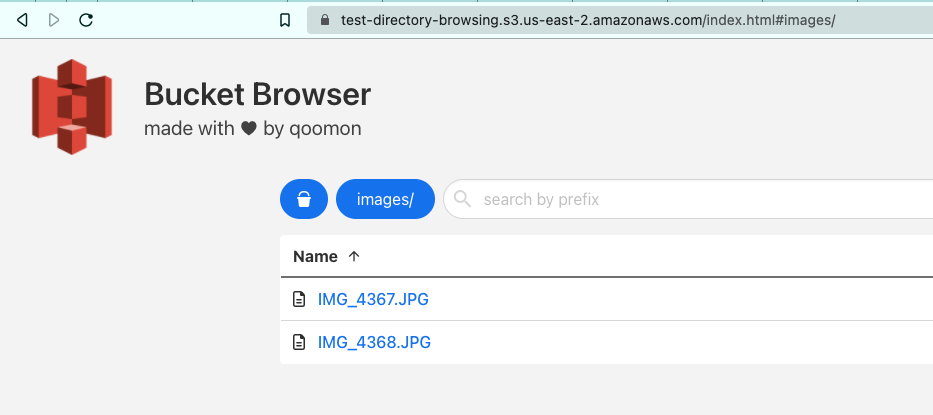

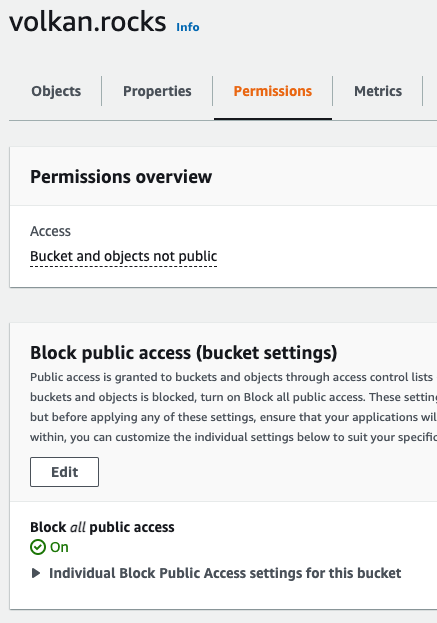

By default, all public access to the bucket and its objects is blocked. AWS takes preventing accidental leaks caused by poorly configured S3 buckets very seriously, so they lock everything down by default and make it deliberately hard to open access up. In a previous post, I discussed public access to a bucket: How to easily browse Amazon S3 Buckets.

So, to make your website publicly accessible, you first need to turn off the Block all public access setting.
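For reference, unchecking Block all public access corresponds to setting all four Block Public Access flags to false. boto3 exposes this as `put_public_access_block`, and the sketch below just builds its arguments (the helper name is hypothetical). Block Public Access can also be switched on at the account level. If it is, changing the bucket setting alone won’t be enough.

```python
def allow_public_access_request(bucket: str) -> dict:
    """Arguments for boto3's S3 put_public_access_block call that turn off all
    four settings, like unchecking "Block all public access" in the console."""
    return {
        "Bucket": bucket,
        "PublicAccessBlockConfiguration": {
            "BlockPublicAcls": False,
            "IgnorePublicAcls": False,
            "BlockPublicPolicy": False,
            "RestrictPublicBuckets": False,
        },
    }


# Usage with boto3:
#   s3.put_public_access_block(**allow_public_access_request("volkan.rocks"))
```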

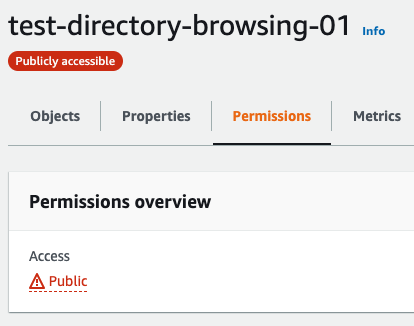

To do this, switch to the Permissions tab on your bucket page.

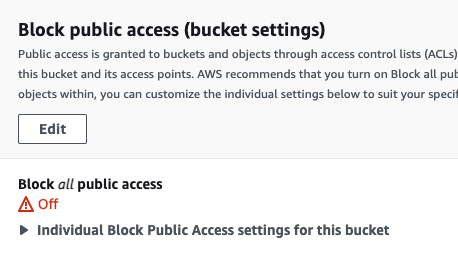

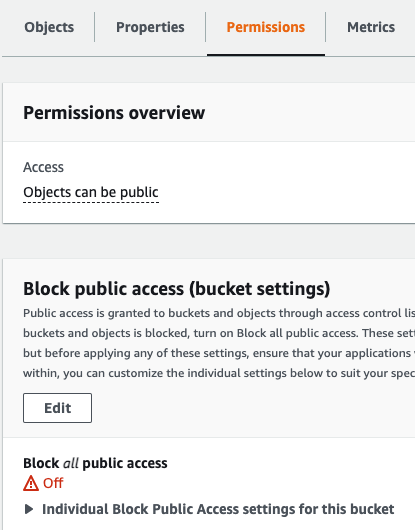

You should see in the overview section that it says “Bucket and objects not public”, and Block public access (bucket settings) is on.

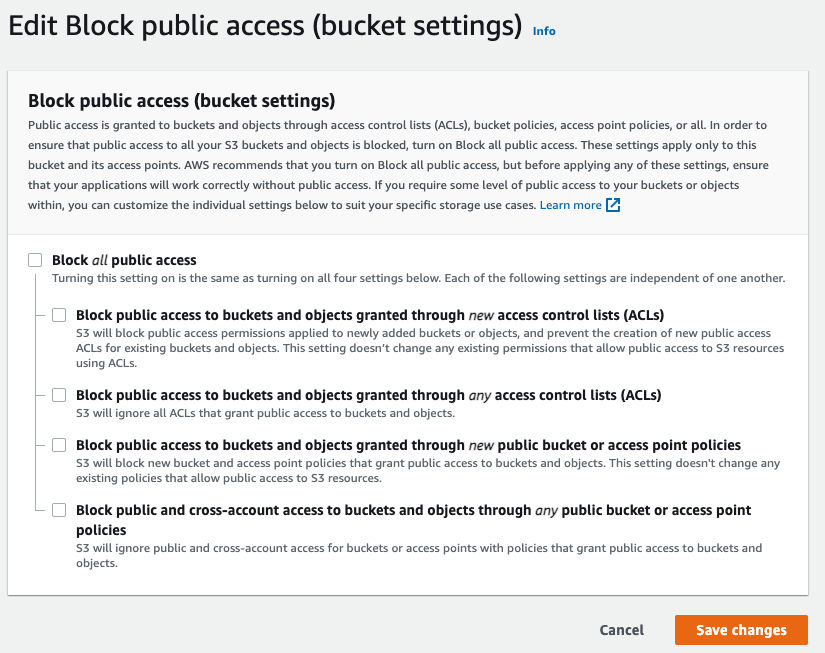

Click the Edit button in the Block public access (bucket settings) section.

Uncheck Block all public access and click the Save changes button.

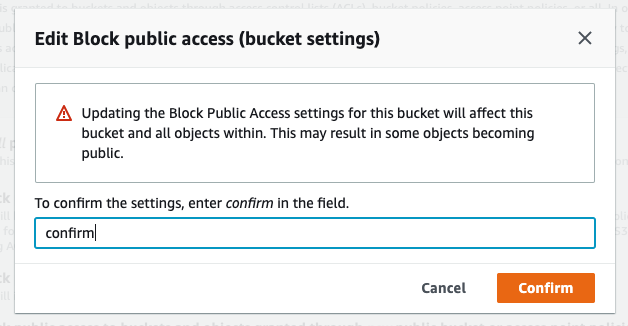

In the confirmation dialog box, type confirm and click the Confirm button.

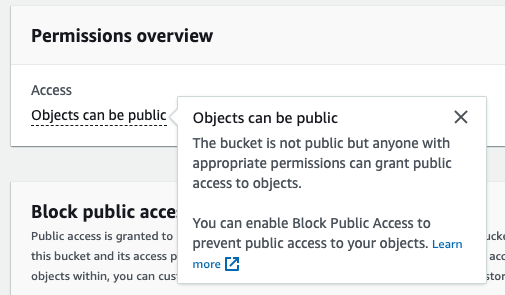

Now you should see the “Objects can be public” message in the permissions overview:

Step 9: Add a bucket policy

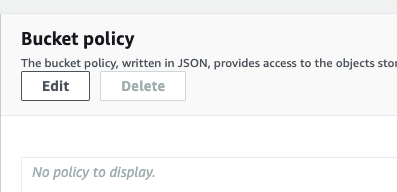

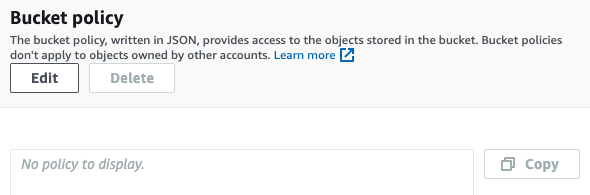

In Step 8, you made it possible for objects to be public, but they are still private by default.

To make the bucket contents public, stay on the Permissions tab, scroll down to the Bucket policy section and click the Edit button.

In the policy text area, paste the following policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::{Your Bucket Name}/*"
      ]
    }
  ]
}
```

Replace {Your Bucket Name} with the actual name of your bucket.
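Rather than editing the placeholder by hand, you could generate the policy from the bucket name. This sketch uses a hypothetical helper name and produces the same public-read policy. The resulting JSON string is what boto3’s `put_bucket_policy` expects in its `Policy` argument.

```python
import json


def public_read_policy(bucket: str) -> str:
    """Public read policy for every object in the bucket, as a JSON string."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Usage with boto3:
#   s3.put_bucket_policy(Bucket="volkan.rocks", Policy=public_read_policy("volkan.rocks"))
```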

Then, scroll down and click the Save changes button.

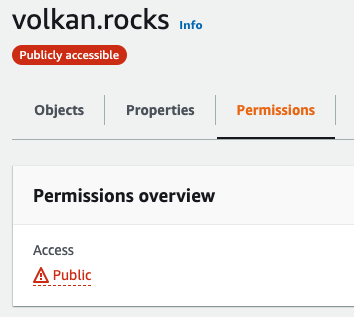

You will be redirected to the permissions page, and in the overview, you should see the objects in your bucket are now public:

Step 10: Test your website

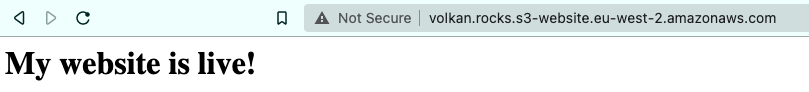

Finally, you get to test your website. Go to the URL you tried in Step 5 again; this time, you should see that your website is up and running on the Internet:

The URL is a bit ugly, but now you have a working website. Now let’s move on to the next section, where you beautify the URL by using your own domain.

Part 2: Use your own domain for your website

It is generally hard to write a tutorial that involves domains and DNS settings because there are so many registrars out there, and it is impossible to cover them all in detail. In this tutorial, I will use Amazon’s own DNS service, Route 53. This section assumes that you registered your domain via Route 53 and have access to the Route 53 console.

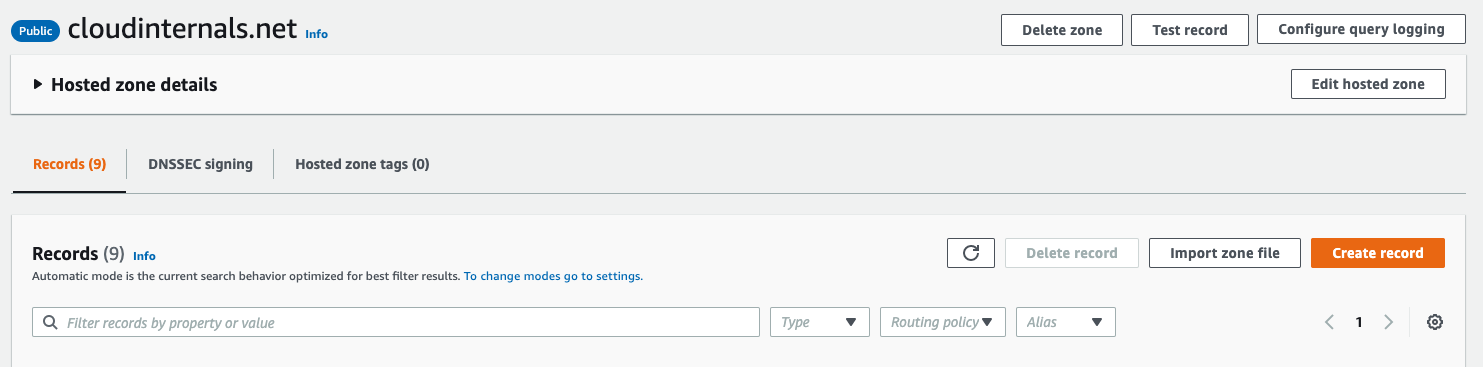

Step 1: Create a hosted zone

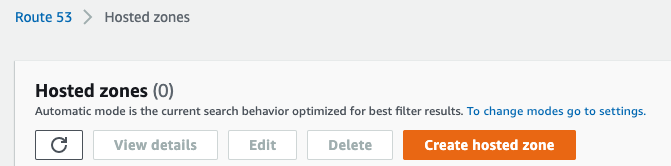

Go to the Hosted zones page and click the Create hosted zone button.

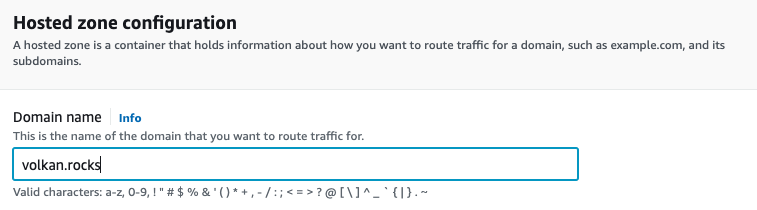

Step 2: Enter your domain’s name, scroll down and click the Create hosted zone button.

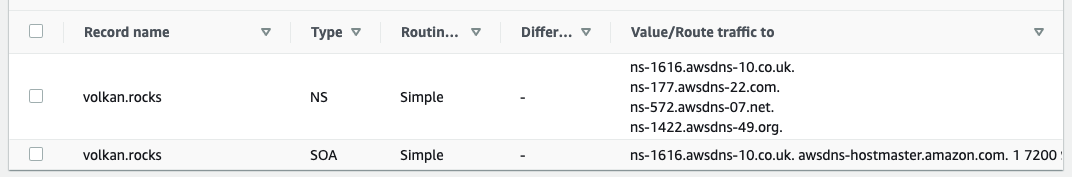

When a hosted zone is created, Route 53 allocates a set of nameservers for your domain. Note them down; you will need them in the next step.
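The same step via boto3 is `route53.create_hosted_zone`. It needs a `CallerReference` that is unique for each request and returns the allocated nameservers under `DelegationSet`. The helpers below are hypothetical. They only build the request and read the response, so they run without AWS access.

```python
import uuid


def hosted_zone_request(domain: str) -> dict:
    """Keyword arguments for boto3's route53 create_hosted_zone call."""
    return {
        "Name": domain,
        # A unique string per request, so a failed request can be retried
        # without creating the zone twice.
        "CallerReference": str(uuid.uuid4()),
    }


def name_servers(create_response: dict) -> list:
    """Extract the allocated nameservers from a create_hosted_zone response."""
    return create_response["DelegationSet"]["NameServers"]


# Usage with boto3:
#   route53 = boto3.client("route53")
#   response = route53.create_hosted_zone(**hosted_zone_request("volkan.rocks"))
#   print(name_servers(response))
```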

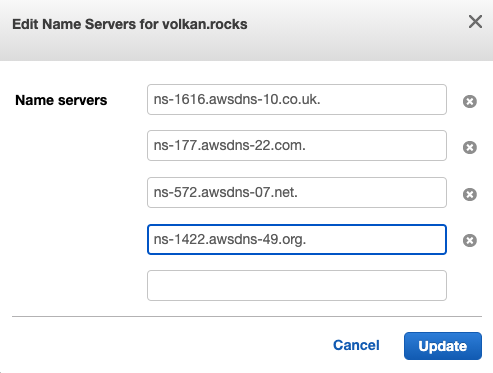

Step 3: Go to the Registered domains page and replace the nameservers with the ones from your hosted zone.

Click the Add or edit nameservers link under the current name server list. It should open a dialog box. Replace the values with the ones you copied from the hosted zone:

You will receive an email once the update request has been carried out by AWS. It generally takes less than a minute.
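You can also manage the registration from code. The matching call is `update_domain_nameservers` on the separate `route53domains` client, whose API is served from the us-east-1 region. The helper below is a hypothetical sketch. Stripping the trailing dot that the console shows on nameserver names is an assumed precaution, not a documented requirement.

```python
def update_nameservers_request(domain: str, nameservers: list) -> dict:
    """Arguments for boto3's route53domains update_domain_nameservers call."""
    return {
        "DomainName": domain,
        # Names copied from the console often end in "."; stripping it is a
        # precaution (assumption), not a documented requirement.
        "Nameservers": [{"Name": ns.rstrip(".")} for ns in nameservers],
    }


# Usage with boto3 (the Route 53 Domains API is served from us-east-1):
#   domains = boto3.client("route53domains", region_name="us-east-1")
#   domains.update_domain_nameservers(**update_nameservers_request("volkan.rocks", ns_list))
```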

Step 4: Add an A record to point to your S3 bucket

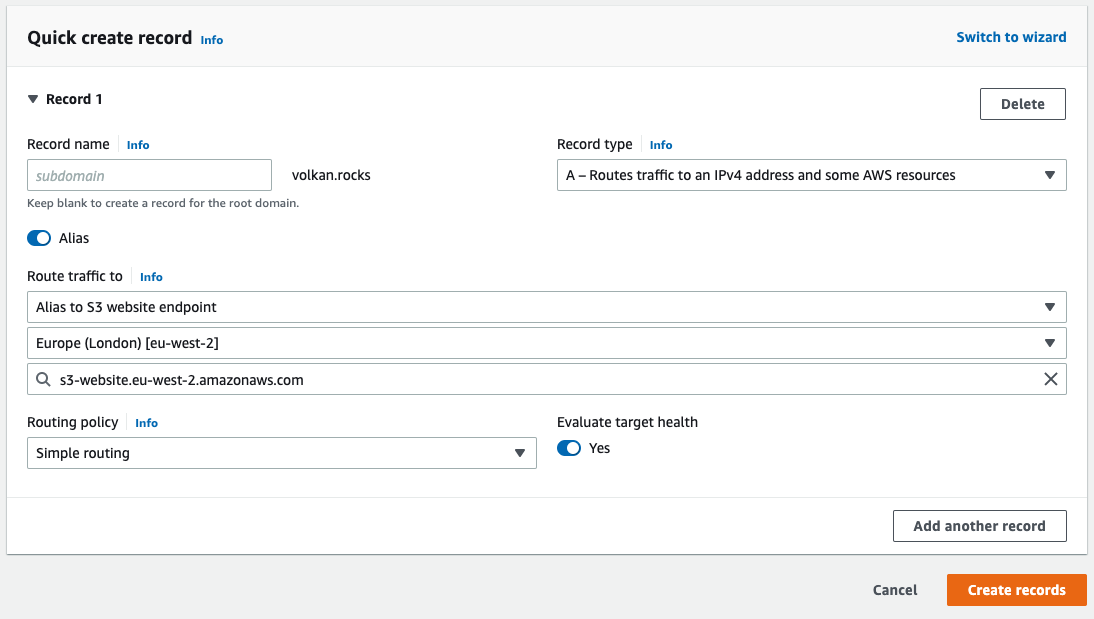

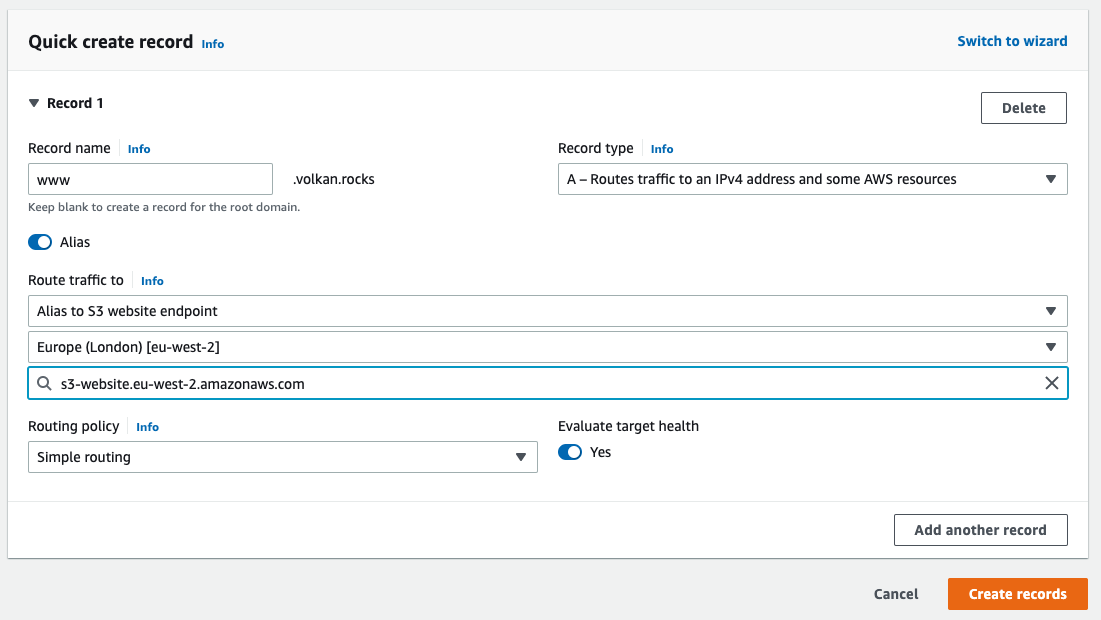

Since Route 53 and S3 are both AWS services, integrating them is very easy. In your hosted zone, click the Create record button. Then, select A - Routes traffic to an IPv4 address and some AWS resources as the record type.

Check the Alias radio button.

In the Route traffic to section, select Alias to S3 website endpoint in the first dropdown.

In the next dropdown, select the region of your bucket.

Finally, in the third dropdown, select the endpoint of your bucket.

So, your settings should look like this:

When you are all set, click the Create records button.
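Behind the console form, an alias record is a `ChangeBatch` passed to `route53.change_resource_record_sets`. For an S3 website alias, two fields matter:

- `DNSName` is the regional website endpoint without the bucket name (for London, `s3-website.eu-west-2.amazonaws.com`).
- `HostedZoneId` is the fixed zone ID AWS publishes for that region’s S3 website endpoints. It isn’t hard-coded here, so look it up in the AWS documentation and pass it in.

The helper name and placeholder values are hypothetical.

```python
def alias_record_change(record_name: str, target_dns: str, target_zone_id: str) -> dict:
    """ChangeBatch that creates (or updates) an alias A record."""
    return {
        "Changes": [
            {
                "Action": "UPSERT",  # create the record, or update it if it exists
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "AliasTarget": {
                        # Zone ID of the S3 website endpoint for your region
                        # (from the AWS docs), not your own hosted zone's ID.
                        "HostedZoneId": target_zone_id,
                        "DNSName": target_dns,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ]
    }


# Usage with boto3:
#   route53.change_resource_record_sets(
#       HostedZoneId=your_hosted_zone_id,
#       ChangeBatch=alias_record_change("volkan.rocks",
#                                       "s3-website.eu-west-2.amazonaws.com",
#                                       s3_website_zone_id))
```

Calling the same helper with `www.{YOUR DOMAIN}` as the record name produces the www record added later in this part.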

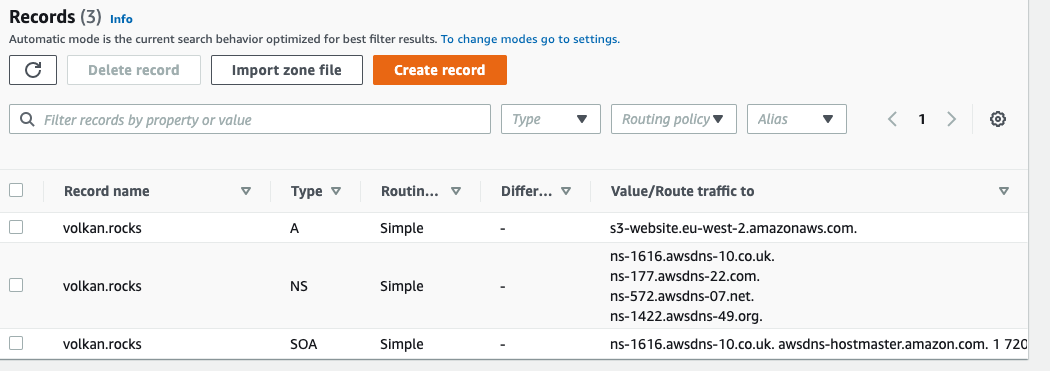

You should now see the A record in your DNS records:

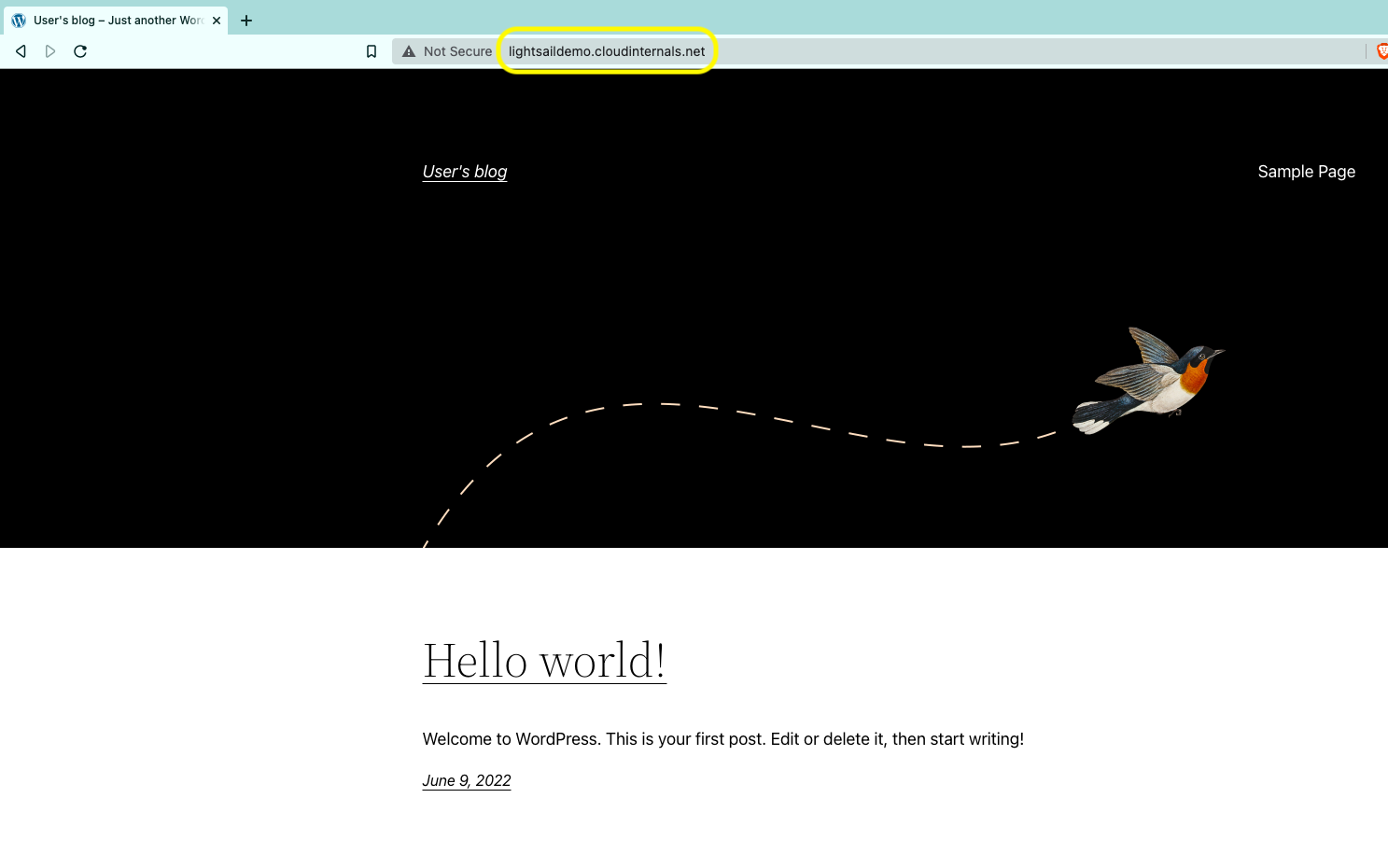

Step 5: Test!

Now visit your domain, and you should see your website running on your custom domain:

Redirect the www subdomain

Even though the website is now up and running, it’s generally considered good practice to redirect the www subdomain to your root domain. The www prefix is largely a thing of the past, but many people still assume it’s the only way to visit a website, so for backwards compatibility, let’s go ahead and implement it.

Step 1: Create a new bucket named www.{YOUR DOMAIN}

Scroll down and click the Create bucket button.

You should see the new bucket in your list:

Click on the bucket’s name to view the bucket settings.

Step 2: Switch to the Properties tab and scroll down to the Static website hosting section

This step is very similar to what you’ve done in the previous part. In the Static website hosting section, click the Edit button.

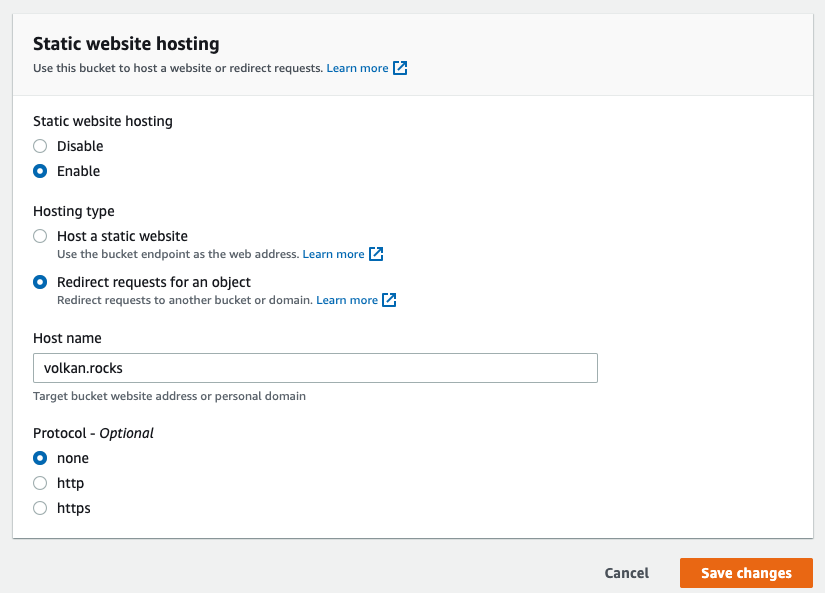

Step 3: Enable static website hosting

Click the Enable radio button to enable static website hosting.

Up until now, you’ve repeated what you did in the previous part. Here comes the difference: instead of selecting the “Host a static website” option, select the Redirect requests for an object option.

Enter your domain name as the host name and click the Save changes button:

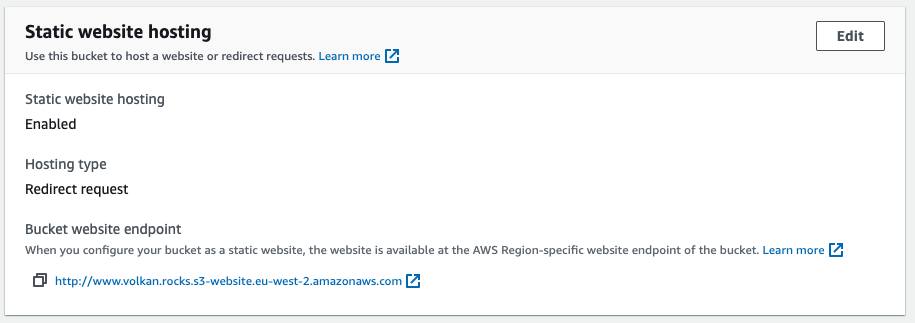

You should now see the static website settings like this:

If you click the bucket website endpoint link, you should be redirected to your domain. The problem is that nobody will visit that link anyway. You still need to redirect “www.{YOUR DOMAIN}” to {YOUR DOMAIN}.

To achieve this, you need to add another A record to your DNS records, pointing the www subdomain to the new S3 bucket you’ve created. So your new DNS entry should look like this:

Click the Create records button to save your changes.
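The redirect bucket from Step 3 differs from the first bucket only in its website configuration. Instead of an `IndexDocument`, it uses `RedirectAllRequestsTo`. A hypothetical sketch of the `put_bucket_website` arguments follows. `Protocol` is optional; once your site is served over HTTPS (Part 3), you may want to switch it to `"https"`.

```python
def redirect_website_request(www_bucket: str, target_host: str,
                             protocol: str = "http") -> dict:
    """Arguments for boto3's S3 put_bucket_website call that redirect every
    request on the www bucket to the root domain."""
    return {
        "Bucket": www_bucket,
        "WebsiteConfiguration": {
            "RedirectAllRequestsTo": {"HostName": target_host, "Protocol": protocol},
        },
    }


# Usage with boto3:
#   s3.put_bucket_website(**redirect_website_request("www.volkan.rocks", "volkan.rocks"))
```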

Step 4: Test the redirect

Open a new tab in your browser and go to www.{YOUR DOMAIN}; you should be redirected to your root domain. DNS changes can take a few minutes to propagate, so if you don’t see the result immediately, it might be a good time for a coffee break.

Part 3: Amazon CloudFront & AWS Certificate Manager (ACM)

So far, you have implemented a static website running at your domain and a redirect for the www subdomain. What’s missing is HTTPS support. Unfortunately, Amazon S3 website endpoints don’t support HTTPS. If you want to serve your site over HTTPS, you will need to create a CloudFront distribution.

What is CloudFront?

Before going forward with the implementation, let’s take a break and look into what CloudFront is and what it provides.

Amazon CloudFront is a Content Delivery Network (CDN) service. A CDN is a collection of servers spread out geographically. As the Internet is global, you would normally receive traffic from all around the globe. If your site is hosted in the US, for example, a user from Europe needs to make many network hops to reach your content. With a CDN, your content is cached in locations all around the world, so users pull it from a location close to them. This approach reduces latency and cuts server costs. In this tutorial, the added benefit is that CloudFront can serve your site over HTTPS with an SSL/TLS certificate for your domain.

Now, let’s crack on with the implementation:

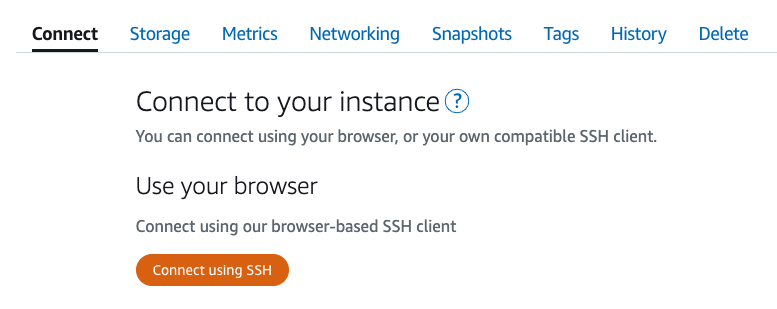

Step 1: Go to the Amazon CloudFront dashboard and click the Create a CloudFront distribution button.

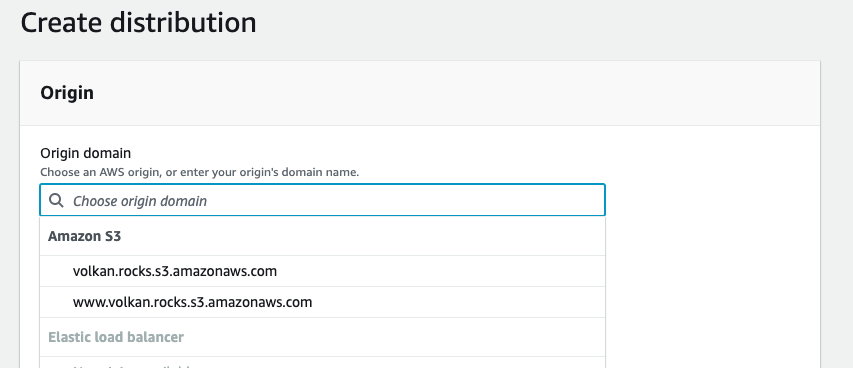

Step 2: Set up the origin

Click inside the Origin domain textbox, and you should see the S3 buckets you’ve created in the previous sections:

Select your domain’s bucket (without the www).

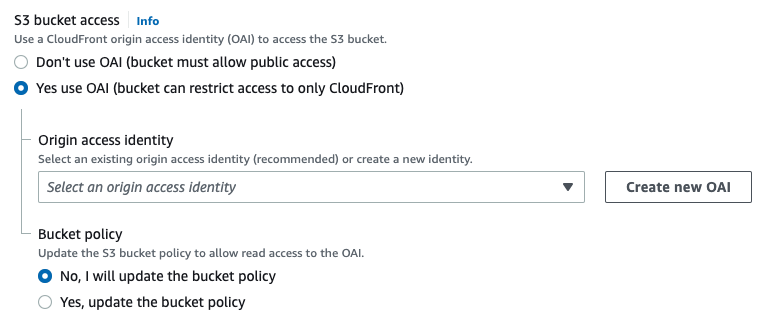

Step 3: Set up Origin Access Identity (OAI)

As you recall, your bucket currently has public read access so that it can serve its content. When you use a CDN, you don’t want people going straight to your bucket to pull the content. It’s good practice to restrict this access to CloudFront only, for better performance and more control over the distribution.

So, select Yes use OAI (bucket can restrict access to only CloudFront) radio button.

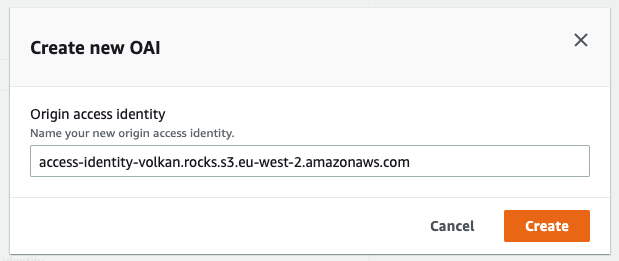

Currently, there is no OAI to select, so click the Create new OAI button.

Leave the default name and click the Create button.

Also, select “Yes, update the bucket policy” option. You attached a bucket policy to allow public access in the previous section. By selecting “Yes, update the bucket policy”, you’re telling AWS to replace that bucket policy with a restricted one that only allows CloudFront to access the bucket contents.
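For reference, a statement that grants an OAI read access looks roughly like the one generated below. The OAI ID is a placeholder and the helper is hypothetical. After CloudFront updates the policy, open the Permissions tab and check that the earlier `PublicReadGetObject` statement is gone. While it is still present, anyone can read the bucket directly.

```python
import json


def oai_read_policy(bucket: str, oai_id: str) -> str:
    """Bucket policy (JSON string) letting only the given OAI read objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    # The OAI is identified by this special CloudFront principal ARN.
                    "AWS": "arn:aws:iam::cloudfront:user/"
                           f"CloudFront Origin Access Identity {oai_id}"
                },
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)
```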

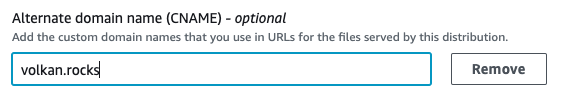

Step 4: Set alternate domain name

Add your domain name as an alternate domain name.

This is important because otherwise your distribution will not be listed in Route 53.

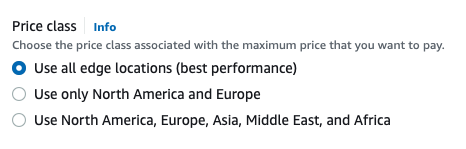

Step 5: Select price class

Next, scroll down until you see the Price class section.

The price class is more a financial decision than a technical one. You can leave the default, which is “Use all edge locations (best performance)”. As the label says, this option provides the best performance. What the label doesn’t say is that it also costs the most. So before you make a decision, I’d recommend taking a look at this document: Choosing the price class for a CloudFront distribution.

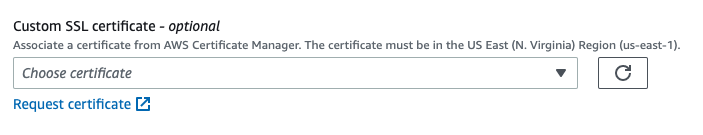

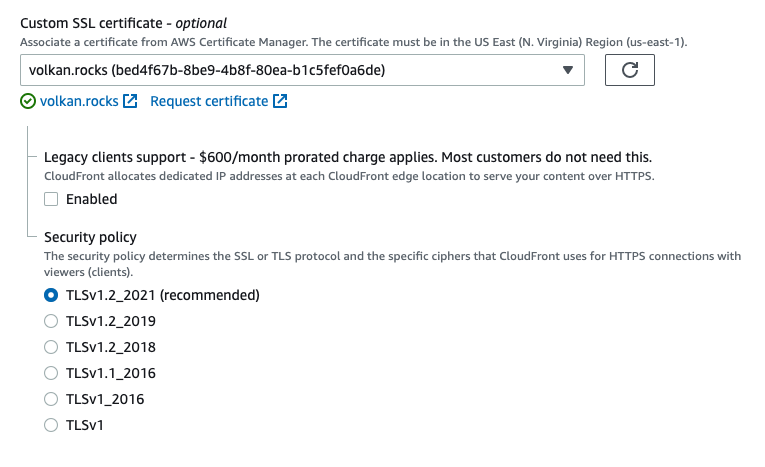

Step 6: Request a Custom SSL certificate

Next up is an important setting: The SSL certificate.

Scroll down to the Custom SSL certificate section:

There is no certificate to select from currently, so click the Request certificate link, which should open a new tab.

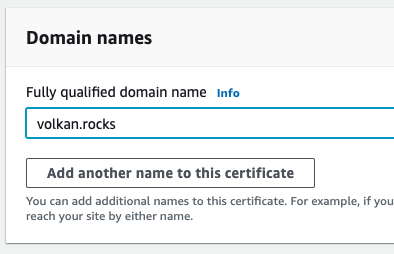

First, enter the domains that the certificate will cover. You can request a wildcard domain as well. In this example, you will use only the root domain because that’s all you need for the time being.

So, enter the name of your domain in the fully qualified domain name textbox:

Leave the validation method as DNS validation and click the Request button at the bottom.
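If you ever request certificates outside this flow, note that CloudFront only accepts ACM certificates from the US East (N. Virginia) region, us-east-1, regardless of where your bucket lives. Here’s a hypothetical sketch of the boto3 `request_certificate` arguments. `SubjectAlternativeNames` is only needed if you also want extra names, such as a wildcard.

```python
def certificate_request(domain: str, extra_names=()) -> dict:
    """Keyword arguments for boto3's ACM request_certificate call."""
    request = {"DomainName": domain, "ValidationMethod": "DNS"}
    if extra_names:
        # Optional, e.g. ["*.volkan.rocks"] to also cover subdomains.
        request["SubjectAlternativeNames"] = list(extra_names)
    return request


# Usage with boto3 (CloudFront only uses certificates from us-east-1):
#   acm = boto3.client("acm", region_name="us-east-1")
#   arn = acm.request_certificate(**certificate_request("volkan.rocks"))["CertificateArn"]
```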

Step 7: Create the SSL certificate

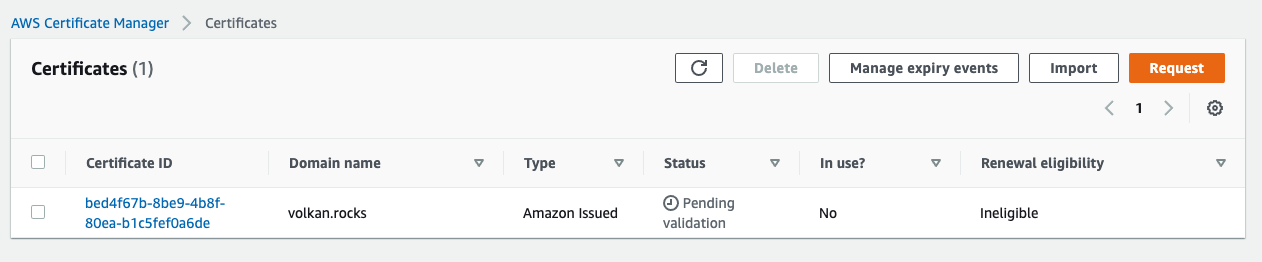

You will be redirected to the certificates page after you’ve requested your certificate. Refresh the page to see your certificate.

Click on the Certificate ID link.

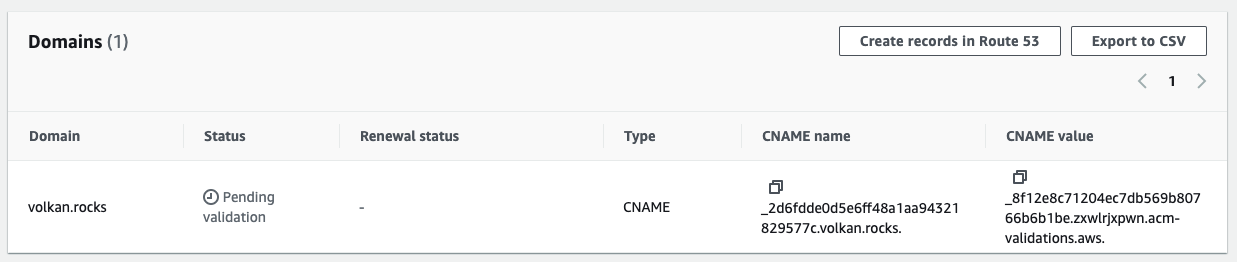

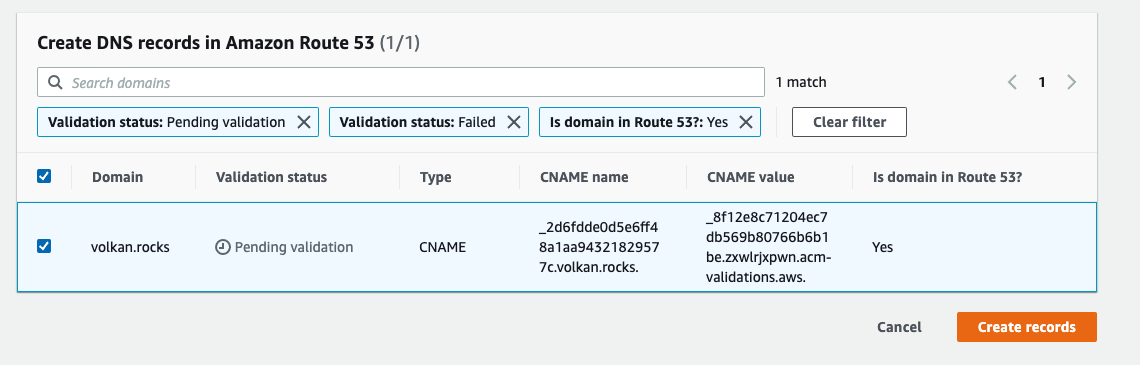

In the Domains section, click the Create records in Route 53 button.

On the next page, click the Create records button.

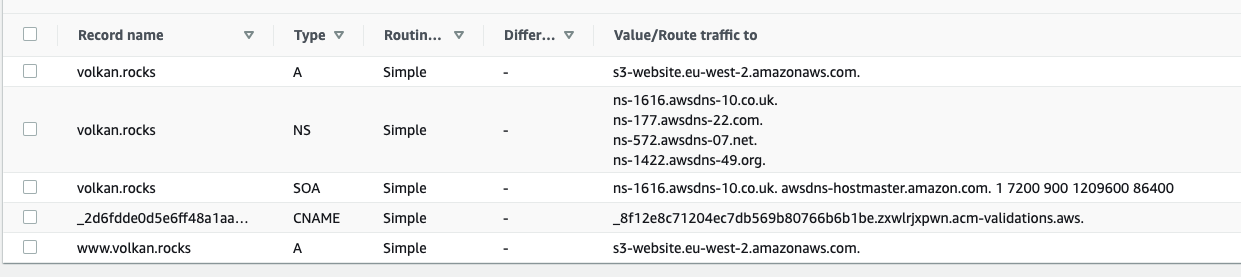

This will create the DNS record in your hosted zone. You can verify it by checking your hosted zone:

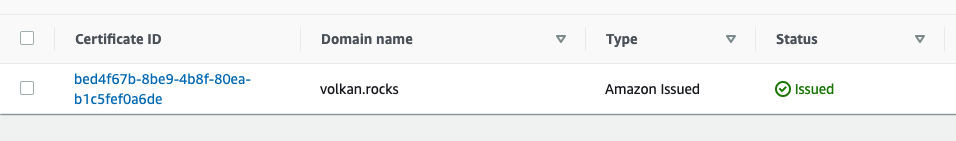

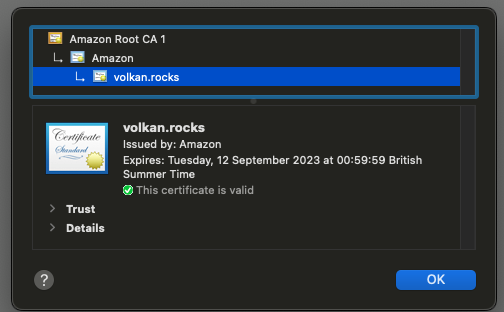

Wait a few minutes for the DNS change to propagate, and you should see your certificate’s status change to Issued:

This is a great benefit of using AWS services together. With just a few button clicks, you were able to generate and validate an SSL certificate for your domain.
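You can reproduce what the Create records in Route 53 button does with the API. The CNAME record that ACM expects is listed under `DomainValidationOptions` in a `describe_certificate` response, and it can be written to your hosted zone as a change batch. A sketch follows. The helper names and the 300-second TTL are assumptions. `ResourceRecord` can be missing for a few seconds after the request, and names that share a record (such as a root and a wildcard domain) need only one CNAME. boto3’s ACM client also offers a `certificate_validated` waiter as an alternative to polling the status yourself.

```python
def validation_record_changes(describe_response: dict) -> dict:
    """Route 53 ChangeBatch with the CNAME records ACM asks for, built from a
    describe_certificate response."""
    changes = []
    seen = set()
    for option in describe_response["Certificate"]["DomainValidationOptions"]:
        record = option.get("ResourceRecord")
        if record is None or record["Name"] in seen:
            continue  # record not generated yet, or already shared by another name
        seen.add(record["Name"])
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": record["Name"],
                "Type": record["Type"],  # "CNAME"
                "TTL": 300,              # assumed TTL
                "ResourceRecords": [{"Value": record["Value"]}],
            },
        })
    return {"Changes": changes}


def is_issued(describe_response: dict) -> bool:
    """True once ACM reports the certificate as issued."""
    return describe_response["Certificate"]["Status"] == "ISSUED"
```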

Step 8: Use the SSL certificate in CloudFront

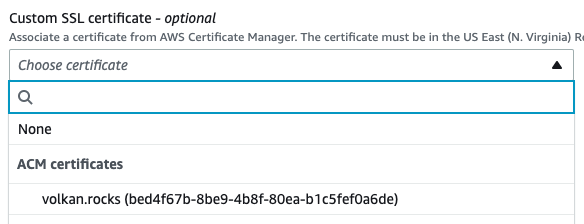

Now go back to the previous tab where you were setting up CloudFront. Click the refresh icon next to the certificate dropdown list:

You should now see your newly issued SSL certificate in the dropdown list. Select the listed certificate and leave the defaults:

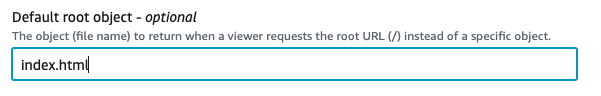

Step 9: Set the default root object

Next, set the Default root object to your website’s root document, which is index.html in this example.

Step 10: Create the distribution

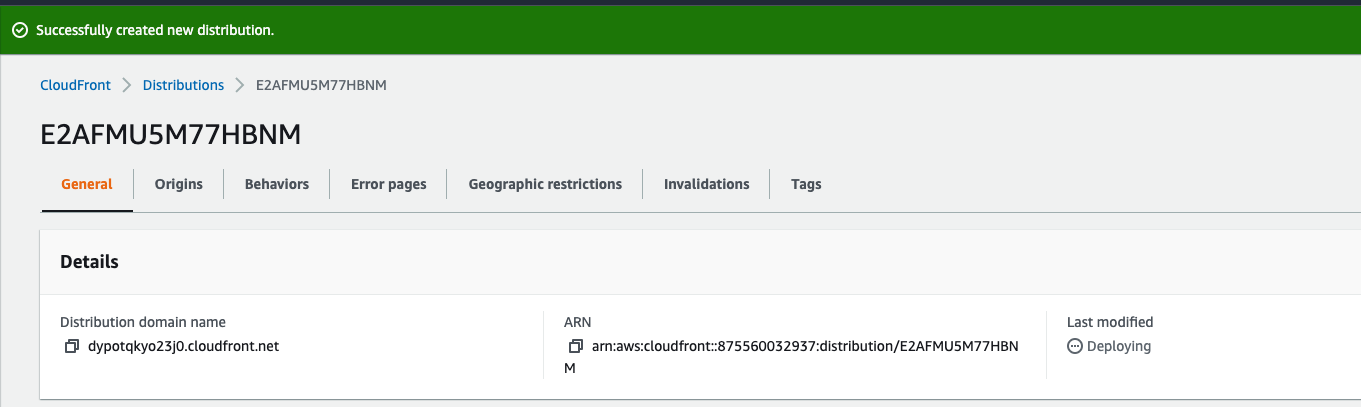

Scroll down to the bottom and click the Create distribution button.

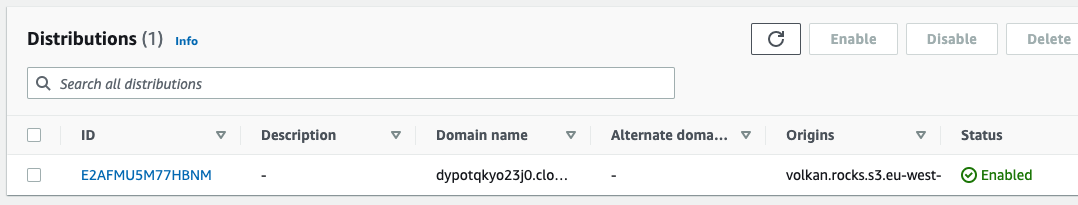

You should be redirected to your distribution page and see that it has started deploying.

The deployment process may take a while depending on your selected price class. Wait a few more minutes for the deployment to finish. You should see its status as Enabled once the deployment has finished.
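The distribution you just created through the console can be expressed as a `DistributionConfig` for boto3’s `cloudfront.create_distribution`. The sketch below mirrors the settings from this part, with a few assumptions labelled in the comments:

- The cache policy ID is passed in, for example the ID of the managed CachingOptimized policy looked up in the console.
- Viewers are redirected to HTTPS, rather than using the console’s default of allowing both HTTP and HTTPS.

The helper name and all values in the test are placeholders.

```python
import uuid


def distribution_config(domain: str, bucket_rest_domain: str, oai_id: str,
                        certificate_arn: str, cache_policy_id: str,
                        price_class: str = "PriceClass_All") -> dict:
    """DistributionConfig for boto3's cloudfront.create_distribution (sketch)."""
    origin_id = f"S3-{domain}"  # any unique ID works; this naming is an assumption
    return {
        "CallerReference": str(uuid.uuid4()),  # must be unique per new distribution
        "Comment": f"Static website for {domain}",
        "Enabled": True,
        # Step 4: alternate domain name
        "Aliases": {"Quantity": 1, "Items": [domain]},
        # Step 9: default root object
        "DefaultRootObject": "index.html",
        # Steps 2-3: S3 REST endpoint origin, e.g. volkan.rocks.s3.eu-west-2.amazonaws.com
        "Origins": {
            "Quantity": 1,
            "Items": [{
                "Id": origin_id,
                "DomainName": bucket_rest_domain,
                "S3OriginConfig": {
                    "OriginAccessIdentity": f"origin-access-identity/cloudfront/{oai_id}",
                },
            }],
        },
        "DefaultCacheBehavior": {
            "TargetOriginId": origin_id,
            # Assumption: redirect HTTP to HTTPS (the console default allows both).
            "ViewerProtocolPolicy": "redirect-to-https",
            "CachePolicyId": cache_policy_id,
        },
        # Steps 6-8: the ACM certificate (issued in us-east-1)
        "ViewerCertificate": {
            "ACMCertificateArn": certificate_arn,
            "SSLSupportMethod": "sni-only",
            "MinimumProtocolVersion": "TLSv1.2_2021",
        },
        # Step 5: "PriceClass_100", "PriceClass_200" or "PriceClass_All"
        "PriceClass": price_class,
    }


# Usage with boto3:
#   cf = boto3.client("cloudfront")
#   dist = cf.create_distribution(DistributionConfig=distribution_config(...))
```

If a script should block until the deployment finishes, boto3 also provides a `distribution_deployed` waiter (`cf.get_waiter("distribution_deployed")`).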

Step 11: Update your DNS to point to the CloudFront distribution

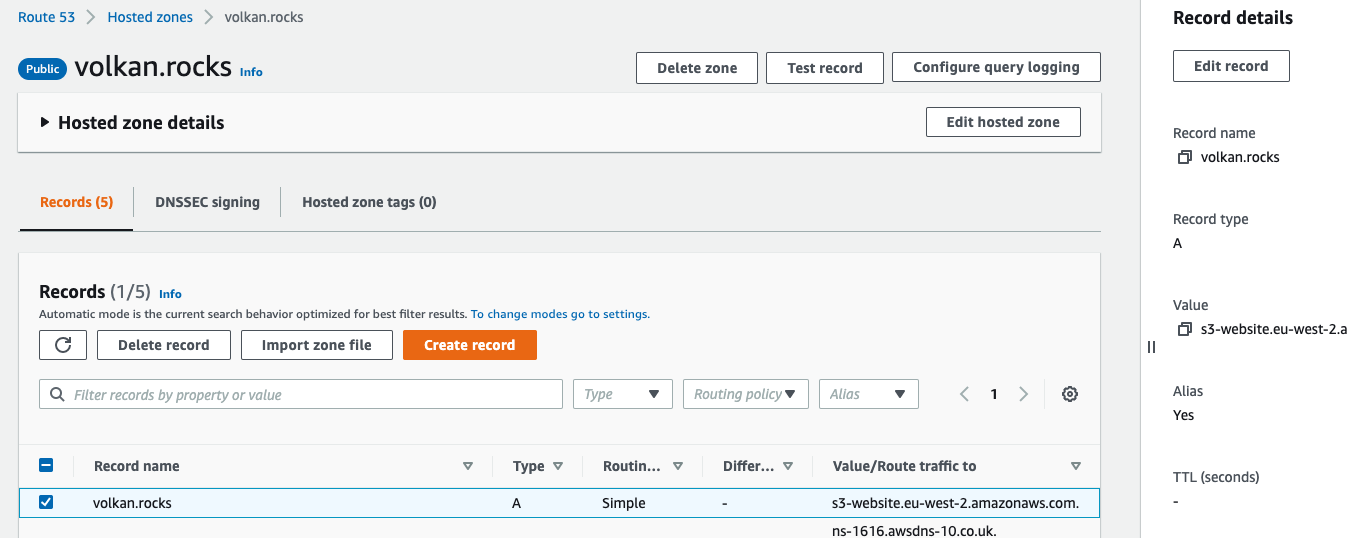

The final step is pointing your domain to your CloudFront distribution. To achieve this, open your Route 53 hosted zone settings.

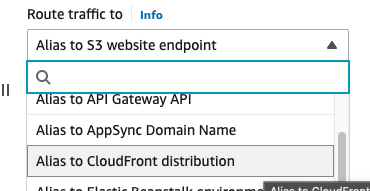

Select the A record for your root domain and click Edit record in the right pane:

This time select Alias to CloudFront distribution in the Route traffic to dropdown list:

Your distribution should be listed in the dropdown:

Click the Save button.
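In API terms, this edit is another alias `UPSERT`, this time pointing at the distribution’s `*.cloudfront.net` domain. CloudFront aliases always use the hosted zone ID `Z2FDTNDATAQYW2`, and `EvaluateTargetHealth` must be false for them. The helper and the distribution domain in the test are hypothetical.

```python
# Route 53 uses this single hosted zone ID for every CloudFront alias target.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def cloudfront_alias_change(record_name: str, distribution_domain: str) -> dict:
    """ChangeBatch that points an A record at a CloudFront distribution."""
    return {
        "Changes": [{
            "Action": "UPSERT",  # replaces the existing S3 alias record
            "ResourceRecordSet": {
                "Name": record_name,
                "Type": "A",
                "AliasTarget": {
                    "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                    "DNSName": distribution_domain,  # e.g. dxxxxxxxx.cloudfront.net
                    "EvaluateTargetHealth": False,
                },
            },
        }]
    }


# Usage with boto3:
#   route53.change_resource_record_sets(
#       HostedZoneId=your_hosted_zone_id,
#       ChangeBatch=cloudfront_alias_change("volkan.rocks", dist["Distribution"]["DomainName"]))
```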

Step 12: Test HTTPS

Now, it’s time for the final test. Open a new tab in your browser and enter the following URL: https://{YOUR DOMAIN}.

You should see your site is running over HTTPS:

The great thing about this certificate is that ACM renews it automatically, as long as it stays in use and its DNS validation record remains in your hosted zone. You can read more on that here: Managed renewal for ACM certificates.

Conclusion

This has been a long tutorial, but I hope you enjoyed it. It was meant to be a step-by-step tutorial so that you could follow along. AWS has lots of services, and they work nicely with each other. By using only AWS services, you are now able to run your own website over HTTPS in a serverless environment.