Deep Dive into AWS Lambda Public URLs

With function URLs enabled, a Lambda function can be invoked directly through its own public HTTPS URL.

Lambda is AWS’s Function-as-a-Service (FaaS) offering: a serverless, event-driven compute service. Lambda functions can play an essential role in a microservice architecture. However, Lambda had one significant limitation until recently: you couldn’t invoke a Lambda function as an API endpoint on its own. That was only possible through other services such as Amazon API Gateway. With the recent announcement of function URLs, we can now create our APIs using only the AWS Lambda service. Let’s take a look.

Part 1: Basics

Create a New Lambda Function

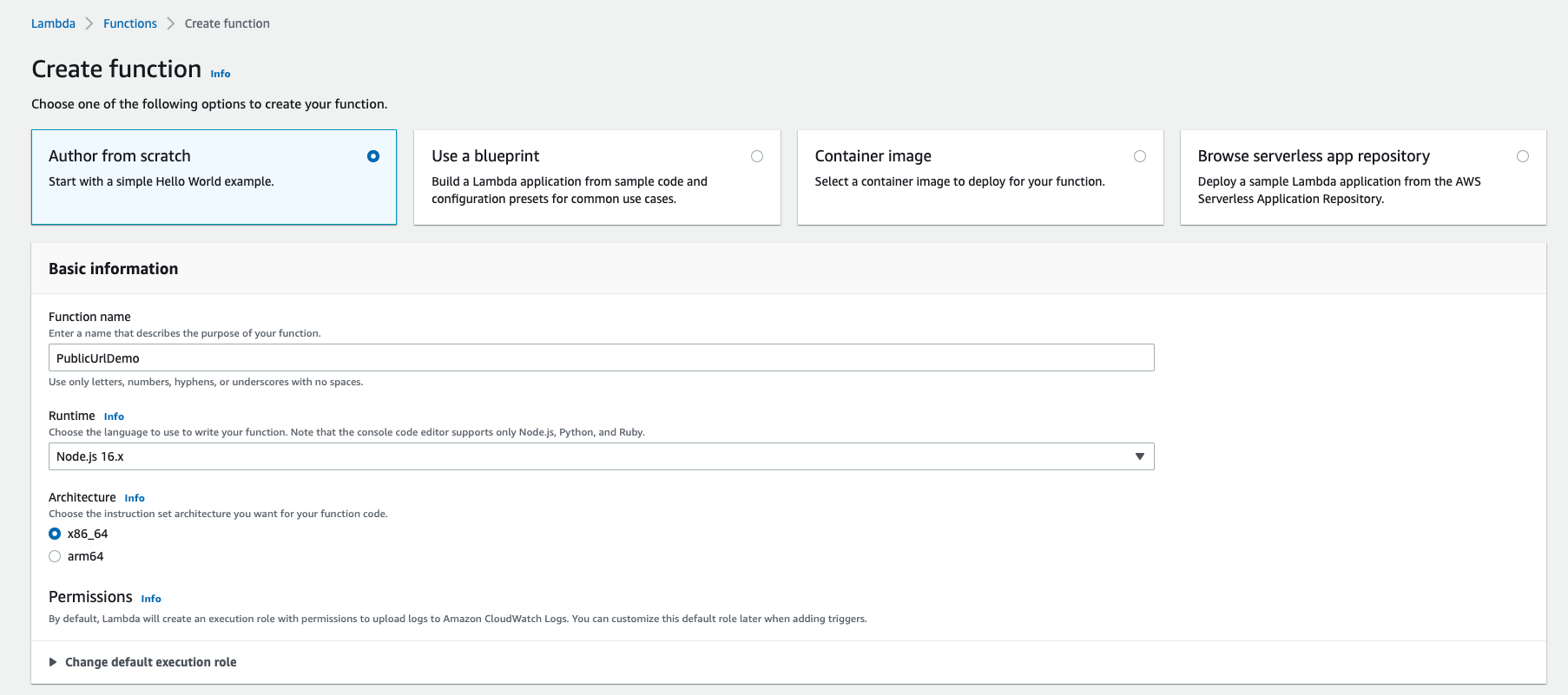

Let’s go ahead and create a simple Lambda function using the AWS Management Console:

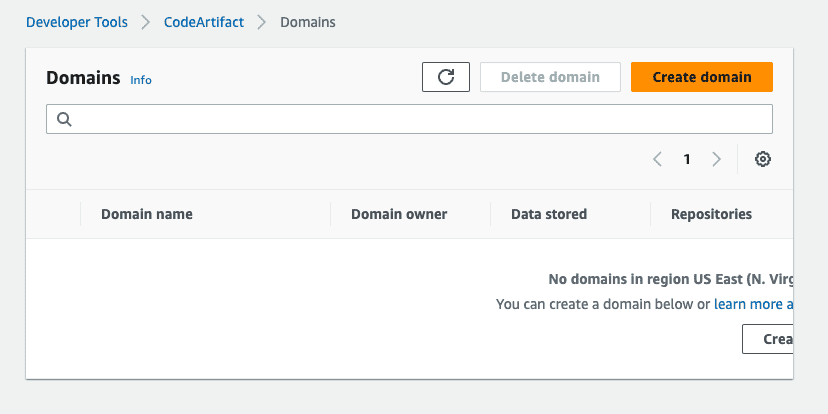

- Go to the Lambda dashboard. (In this example, I’m using the us-east-1 region. If you prefer another one, switch to that region in the console.)

- Click Functions on the left pane and then the Create function button.

- Keep the defaults in the Basic information section.

You can also expand Advanced settings and tick the Enable function URL option, but we are going to do it later in this post.

- Click Create function.

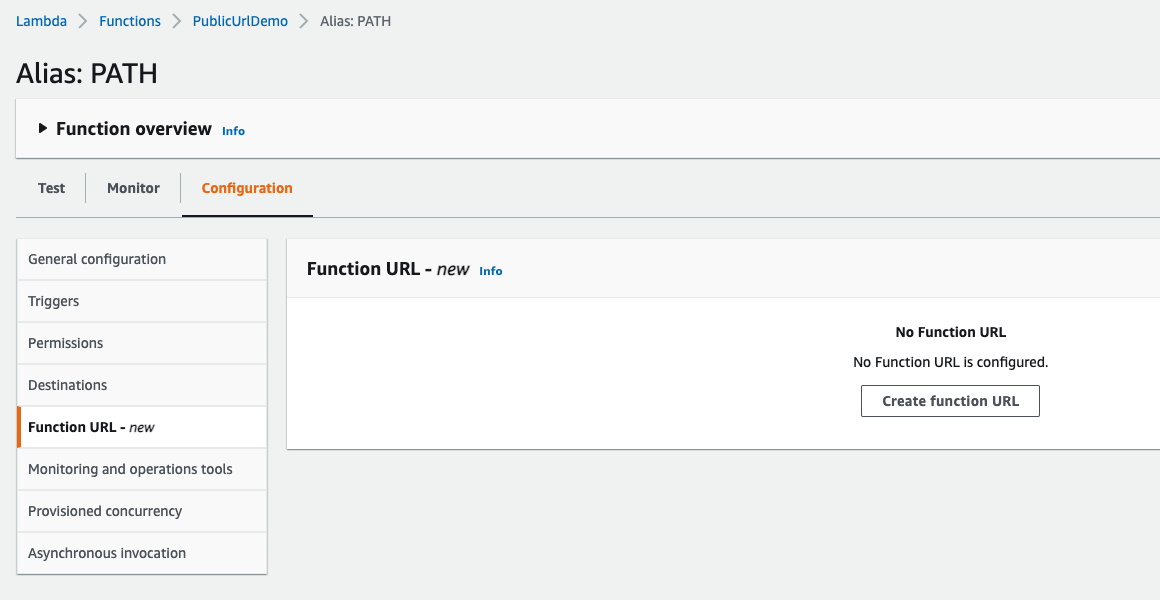

Enable Function URL

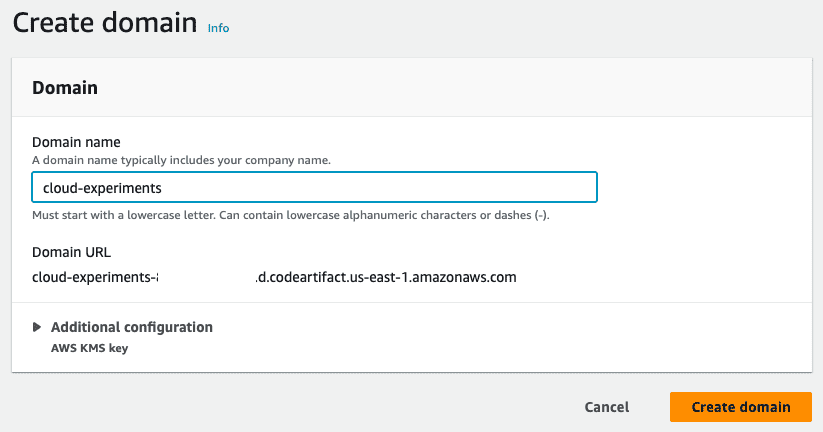

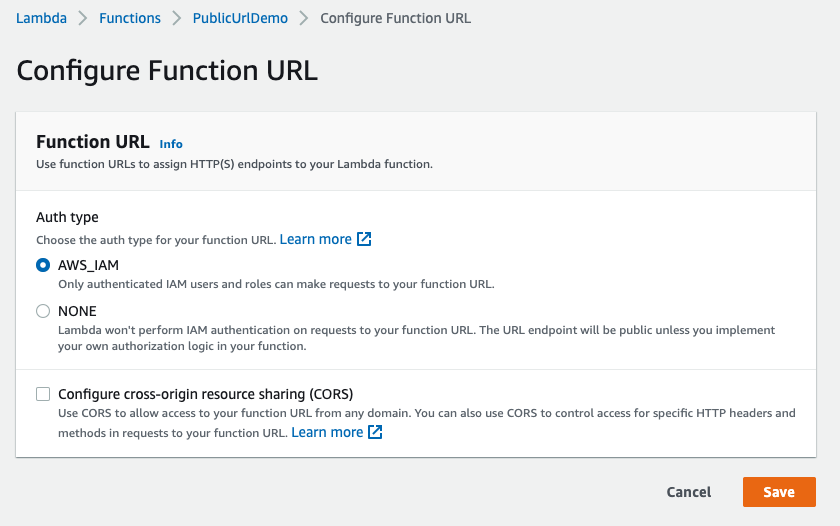

Now that we have a function available, go to the Configuration tab and click Function URL. Then click the Create function URL button. This brings us to the same settings that were offered under Advanced settings when we were creating the function.

At this step, all we want is a Lambda function that we can call from the outside. So, to keep things simple, let’s choose NONE as Auth type.

Click Save to update the settings.

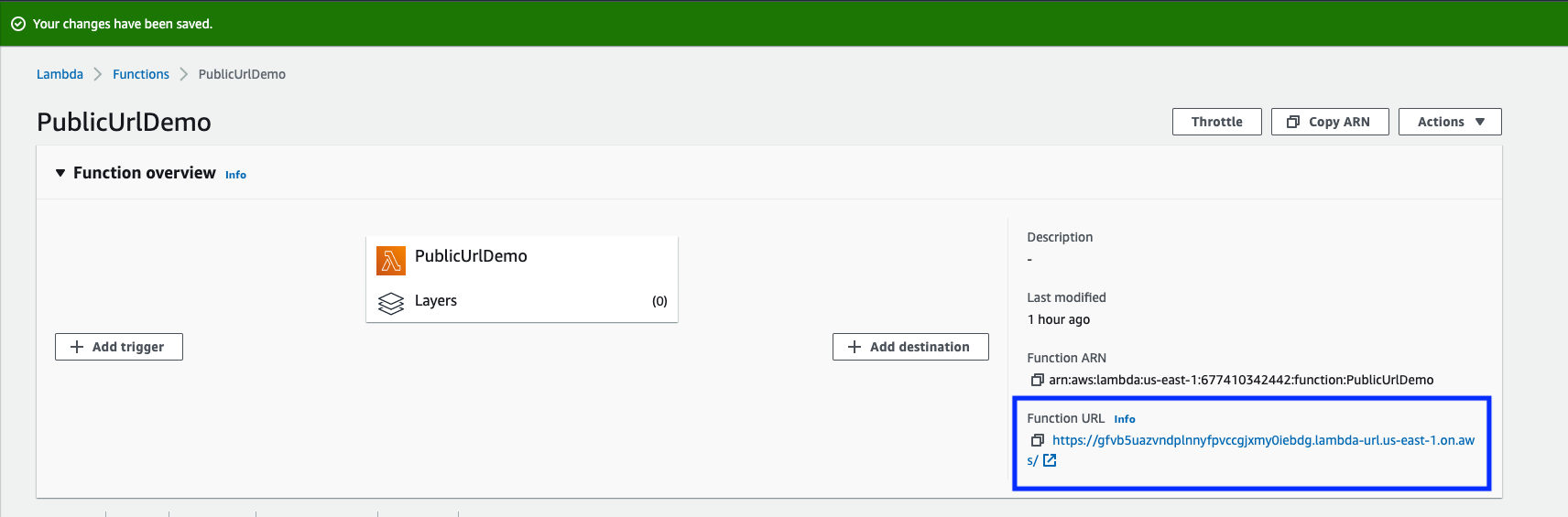

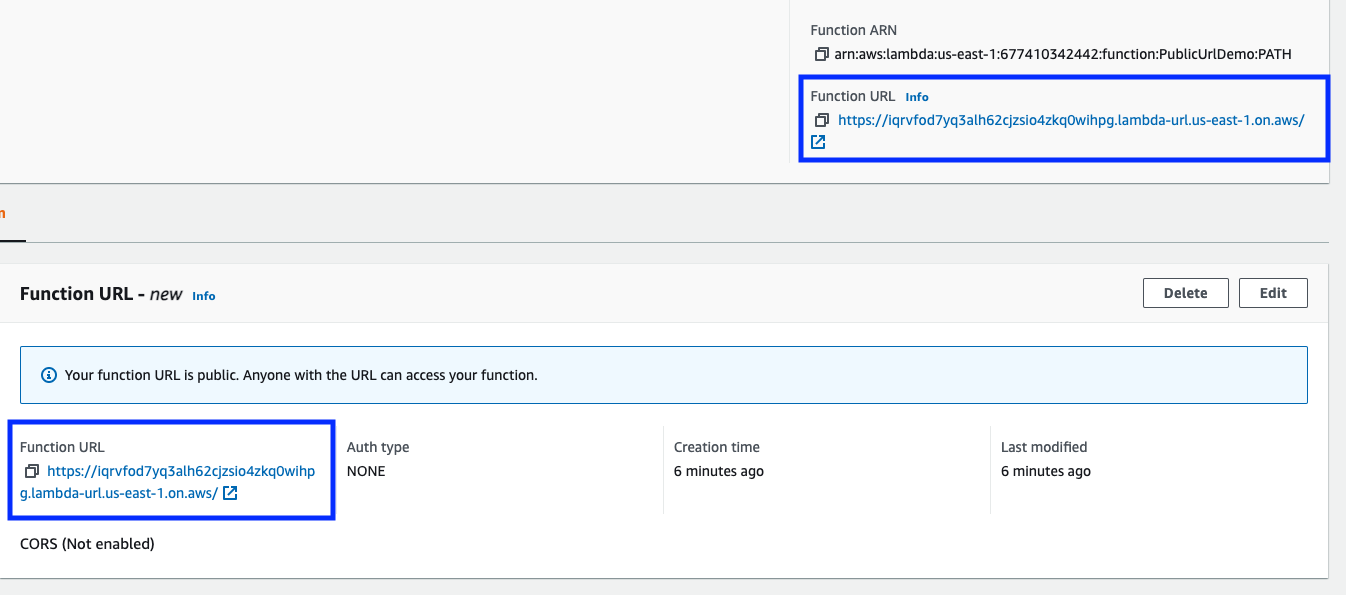

Now we are back on the Function overview page, and we can see our newly generated URL:

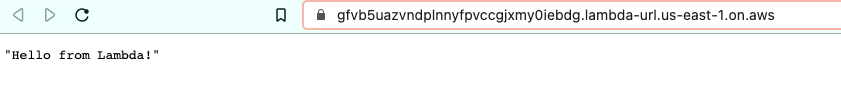

Click on that link to see the URL in action. The default function responds with the “Hello from Lambda!” message.

Part 2: Advanced Topics

Now that we have a working, publicly available API, let’s dig deeper into authentication, CORS, custom domains and how this feature compares to API Gateway.

Authentication

In Part 1, we briefly saw there are two authentication options:

- AWS_IAM

- NONE

Auth type: NONE

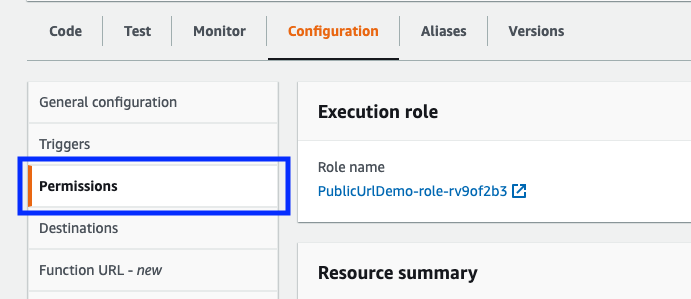

We chose NONE to keep things simple. However, even though we selected NONE, AWS still created a policy for us and added it to the function’s permissions. We can view the created policy in the Permissions section:

First, click Permissions on the left pane.

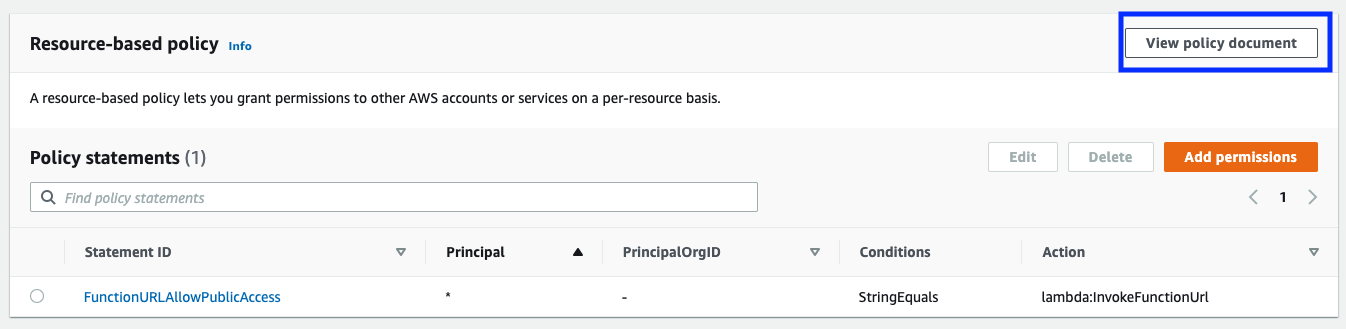

Then scroll down on the right to the Resource-based policy section and click View policy document:

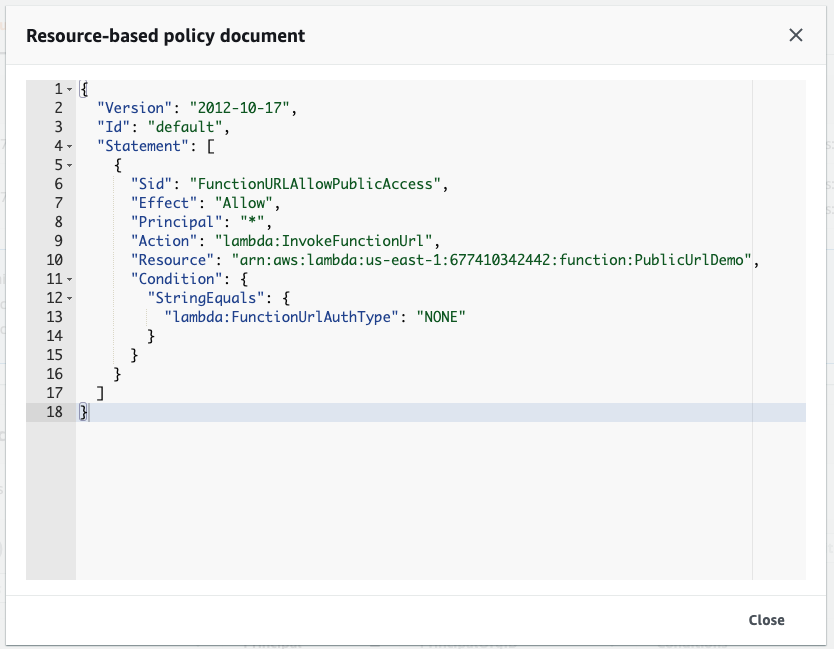

Here you can see the policy document that allows everyone ("Principal": "*") to invoke the function URL ("Action": "lambda:InvokeFunctionUrl").
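For readers who cannot see the screenshot, here is a rough sketch of what such a resource-based policy typically looks like, written as a JavaScript object. The account ID `123456789012` is a placeholder, the exact contents in your console may differ, and `allowsPublicInvoke` is a hypothetical helper added only to make the two key fields explicit.

```javascript
// Sketch of a resource-based policy for a function URL with Auth type NONE.
// The account ID below is a placeholder, not a value from this post.
const functionUrlPolicy = {
  Version: '2012-10-17',
  Id: 'default',
  Statement: [
    {
      Sid: 'FunctionURLAllowPublicAccess',
      Effect: 'Allow',
      Principal: '*',
      Action: 'lambda:InvokeFunctionUrl',
      Resource: 'arn:aws:lambda:us-east-1:123456789012:function:PublicUrlDemo',
      Condition: {
        StringEquals: { 'lambda:FunctionUrlAuthType': 'NONE' },
      },
    },
  ],
};

// Hypothetical helper: true when some statement lets anyone invoke the URL.
function allowsPublicInvoke(policy) {
  return policy.Statement.some(
    (s) =>
      s.Effect === 'Allow' &&
      s.Principal === '*' &&
      s.Action === 'lambda:InvokeFunctionUrl'
  );
}

console.log(allowsPublicInvoke(functionUrlPolicy)); // true
```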

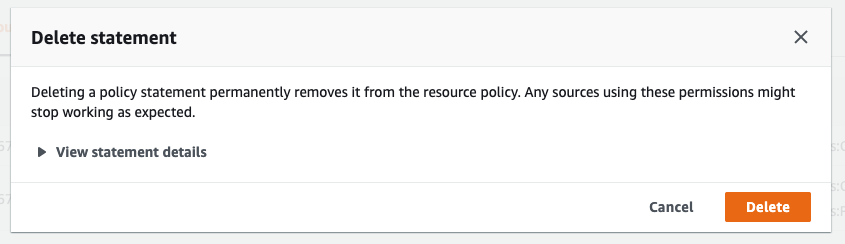

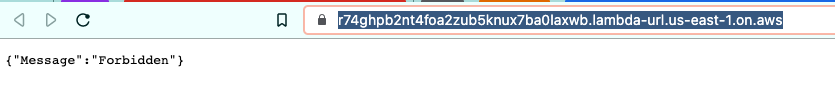

Without this permission in place, we wouldn’t be able to invoke the function. To test that, we can delete the policy statement and try the URL again:

If you scroll up and call the URL again, you will get a Forbidden (HTTP 403) error.

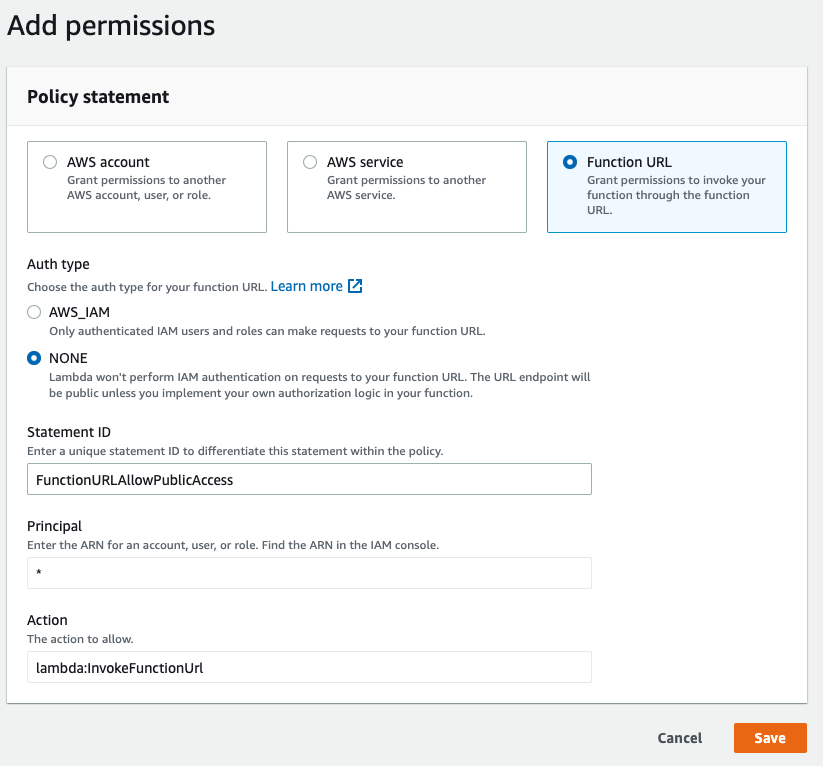

It’s easy to put that permission back in. Click on Add permissions. Select Function URL. Leave the defaults (Auth type: NONE, Statement ID: FunctionURLAllowPublicAccess, Principal: *, Action: lambda:InvokeFunctionUrl) and click Save.

And if you click on the URL, you should see the “Hello from Lambda!” message again.

Auth type: AWS_IAM

Now let’s consider a scenario where we don’t want our API publicly available. For example, we may choose to grant access to a specific user. This might be useful for testing a beta version internally before making it public.

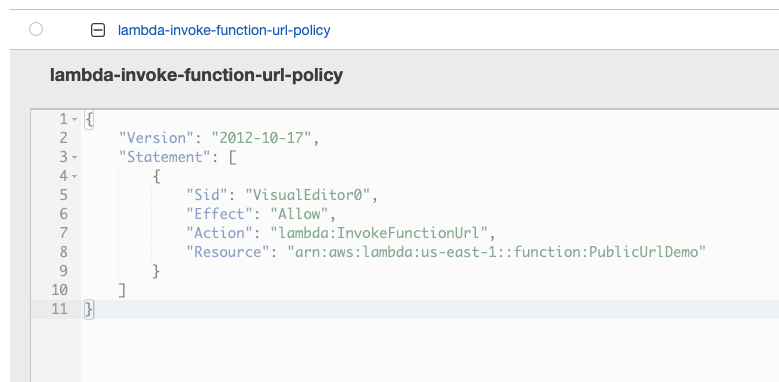

In this example, I’ve created a new policy with lambda:InvokeFunctionUrl permission on our demo Lambda function:

Policy document:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": "lambda:InvokeFunctionUrl",
          "Resource": "arn:aws:lambda:us-east-1::function:PublicUrlDemo"
        }
      ]
    }

Then, I created a user with this policy attached:

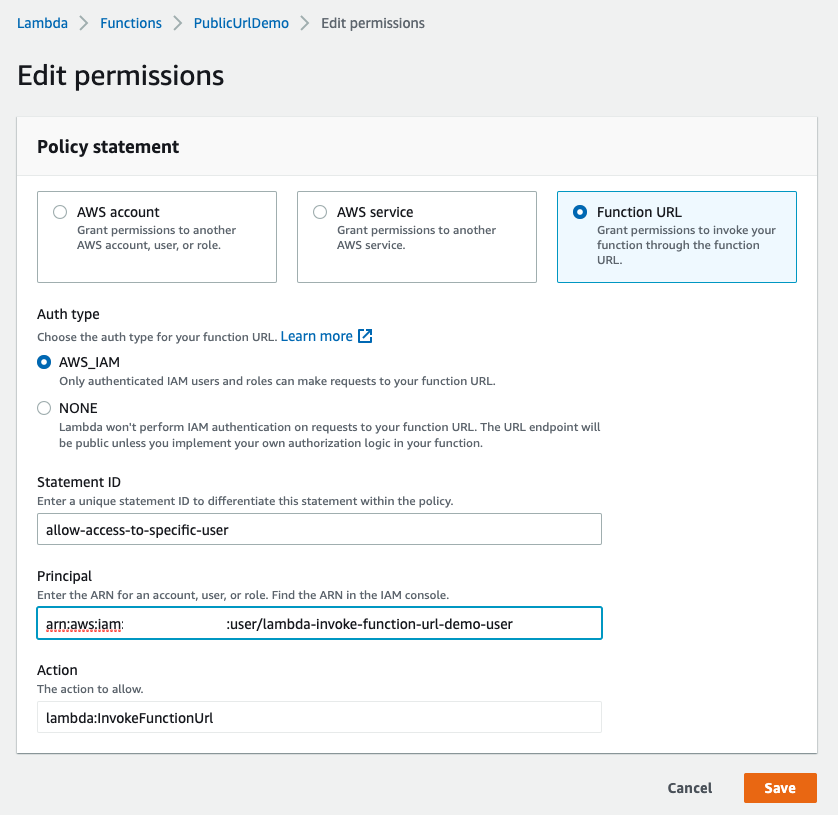

Finally, I went back to the Lambda function permissions and updated the auth type to allow access only to this user:

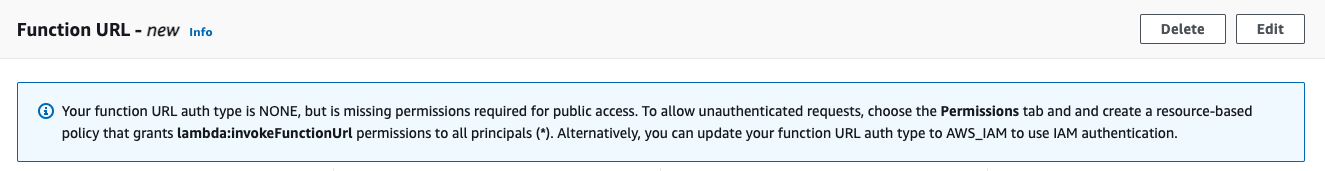

Even though we changed the auth type to AWS_IAM in permissions, we still need to ensure the auth type is set to AWS_IAM in Function URL settings. If there is a mismatch, the console will give us a warning:

If we click on the URL again, we get a Forbidden error.

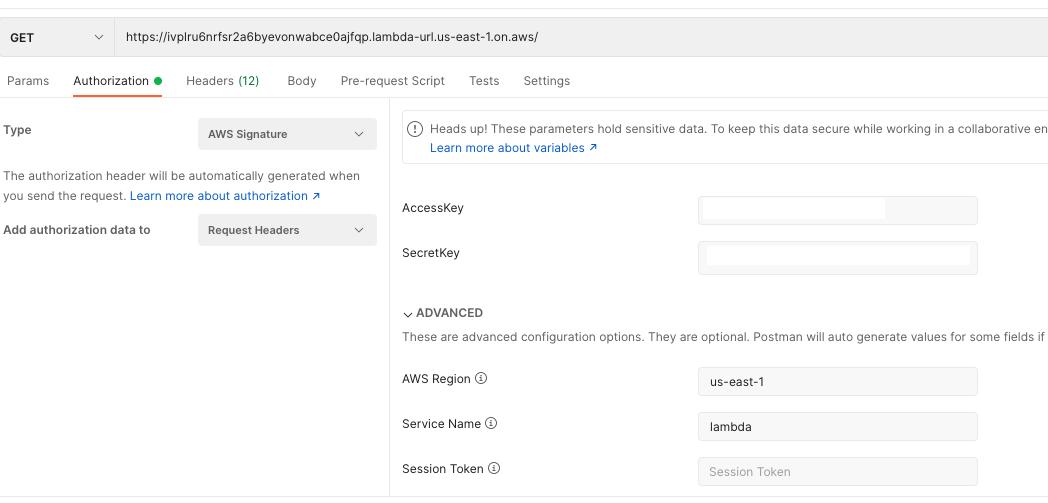

To make it work, we need to sign the request with our new IAM credentials (the access key and secret key we noted down when we created the user). In this example, I used Postman to enter the credentials and sign the request:

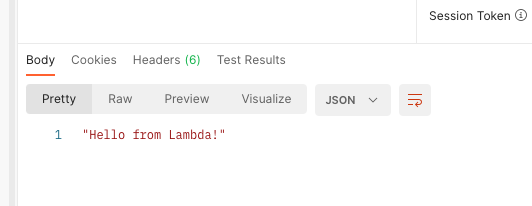

With the request signed, we get our response successfully.
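Postman does the signing for us, but the same thing can be done in code. The following is a minimal sketch of AWS Signature Version 4 signing in Node.js using only the built-in `crypto` module. It assumes long-lived IAM user keys (no session token), signs for the `lambda` service, and skips the special percent-encoding rules that unusual paths or query strings would need. The function name `signFunctionUrlRequest`, the URL, and the credentials in the usage example are all placeholders; for production use, an AWS SDK signer is the safer choice.

```javascript
const crypto = require('crypto');

const sha256Hex = (data) => crypto.createHash('sha256').update(data, 'utf8').digest('hex');
const hmac = (key, data) => crypto.createHmac('sha256', key).update(data, 'utf8').digest();

// Returns the headers to attach to a request so that a function URL with
// Auth type AWS_IAM can verify it. Hypothetical helper, simplified on purpose.
function signFunctionUrlRequest({ method, url, body = '', region, accessKeyId, secretAccessKey, now = new Date() }) {
  const { hostname, pathname, searchParams } = new URL(url);
  const amzDate = now.toISOString().replace(/[-:]|\.\d{3}/g, ''); // e.g. 20220406T120000Z
  const dateStamp = amzDate.slice(0, 8);
  const service = 'lambda';

  // 1. Canonical request: method, path, sorted query, headers, signed header names, body hash
  const canonicalQuery = [...searchParams]
    .map(([k, v]) => [encodeURIComponent(k), encodeURIComponent(v)])
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0))
    .map(([k, v]) => `${k}=${v}`)
    .join('&');
  const canonicalHeaders = `host:${hostname}\nx-amz-date:${amzDate}\n`;
  const signedHeaders = 'host;x-amz-date';
  const canonicalRequest = [method, pathname, canonicalQuery, canonicalHeaders, signedHeaders, sha256Hex(body)].join('\n');

  // 2. String to sign: algorithm, timestamp, credential scope, hash of the canonical request
  const scope = `${dateStamp}/${region}/${service}/aws4_request`;
  const stringToSign = ['AWS4-HMAC-SHA256', amzDate, scope, sha256Hex(canonicalRequest)].join('\n');

  // 3. Derive the signing key from the secret key, then sign
  const signingKey = [dateStamp, region, service, 'aws4_request']
    .reduce((key, part) => hmac(key, part), `AWS4${secretAccessKey}`);
  const signature = hmac(signingKey, stringToSign).toString('hex');

  return {
    'x-amz-date': amzDate,
    Authorization: `AWS4-HMAC-SHA256 Credential=${accessKeyId}/${scope}, SignedHeaders=${signedHeaders}, Signature=${signature}`,
  };
}

// Usage with placeholder values; pass the returned headers to your HTTP client.
const signedHeadersExample = signFunctionUrlRequest({
  method: 'GET',
  url: 'https://example-id.lambda-url.us-east-1.on.aws/',
  region: 'us-east-1',
  accessKeyId: 'AKIDEXAMPLE',
  secretAccessKey: 'example-secret',
});
console.log(Object.keys(signedHeadersExample));
```

The returned `x-amz-date` and `Authorization` headers are what Postman adds behind the scenes when you pick AWS Signature as the authorization type.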

Pricing

In terms of pricing, there is no extra charge for using function URLs. The cost of Lambda executions is calculated the same way whether they are invoked from a browser over the public Internet or from a CLI inside your company network. The details can be found on the AWS Lambda pricing page.

The duration is calculated according to the following rule:

“Duration is calculated from the time your code begins executing until it returns or otherwise terminates, rounded up to the nearest 1 ms.”

This means that if you use the AWS_IAM auth type and receive unauthenticated calls, your code never runs for them, so you don’t pay for those calls. That can help you avoid being charged for traffic from DDoS attacks.

HTTP Methods

When you click on the function URL link on AWS Management Console, you send a GET request to the endpoint. I also used the GET verb in the example above. Actually, the endpoint supports all HTTP verbs.

For example, if you run the curl example below and send a DELETE request, you still get the same “Hello from Lambda” response with an HTTP 200 status code.

    curl --location --request DELETE 'https://{ REPLACE WITH YOUR APIs SUBDOMAIN }.lambda-url.us-east-1.on.aws/' \
      --header 'Accept: application/json' \
      --header 'Content-Type: application/json' \
      --data-raw ''

I don’t think I would need to support multiple methods in a single Lambda function, but it is possible to take different actions based on the HTTP method. The following Node.js Lambda example demonstrates that:

    exports.handler = async (event) => {
      // The HTTP verb of the incoming request
      const httpMethod = event.requestContext.http.method;
      console.log('event:', event);

      // Pick a message based on the verb
      let responseMessage = '';
      switch (httpMethod) {
        case 'GET': responseMessage = 'GETting something'; break;
        case 'DELETE': responseMessage = 'DELETEing something'; break;
        default: responseMessage = 'doing something else';
      }

      const response = {
        statusCode: 200,
        body: JSON.stringify(responseMessage),
      };
      return response;
    };
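If the function should answer only some verbs, one option is to reject the rest explicitly instead of falling through to a default. The sketch below is a variation on the handler above; the allow-list, the messages, and the `buildResponse` helper are illustrative assumptions, not part of the original function.

```javascript
// Hypothetical allow-list; adjust to the verbs your function should accept.
const ALLOWED_METHODS = ['GET', 'DELETE'];

// Builds the response for a given HTTP verb (kept separate so it is easy to test).
function buildResponse(httpMethod) {
  if (!ALLOWED_METHODS.includes(httpMethod)) {
    return {
      statusCode: 405, // Method Not Allowed
      headers: { Allow: ALLOWED_METHODS.join(', ') },
      body: JSON.stringify(`${httpMethod} is not supported`),
    };
  }
  return {
    statusCode: 200,
    body: JSON.stringify(`Handled ${httpMethod}`),
  };
}

exports.handler = async (event) => buildResponse(event.requestContext.http.method);
```

With this in place, a POST request receives a 405 response with an `Allow` header listing the accepted verbs, while GET and DELETE still return 200.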

Paths and Query Parameters

Similar to supporting different HTTP methods, we can also access the path and query parameters and do something with them if required.

For example, I modified the code to handle this type of scenario:

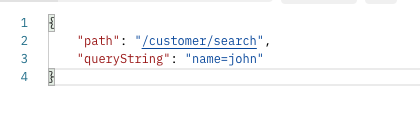

Query string example:

    exports.handler = async (event) => {
      // rawPath and rawQueryString hold the request path and the unparsed query string
      const requestPath = event.rawPath;
      const requestQueryString = event.rawQueryString;

      const responseData = {
        path: requestPath,
        queryString: requestQueryString
      };

      const response = {
        statusCode: 200,
        body: JSON.stringify(responseData),
      };
      return response;
    };

Command to run:

    curl --location --request GET 'https://{ REPLACE WITH YOUR APIs SUBDOMAIN }.lambda-url.{ REPLACE WITH YOUR REGION }.on.aws/customer/search?name=john' \
      --header 'Accept: application/json' \
      --header 'Content-Type: application/json' \
      --data-raw ''

Output:

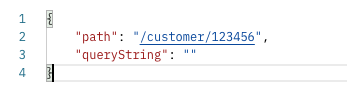

URL path example to get the details of the customer with id 123456:

Command to run:

    curl --location --request GET 'https://{ REPLACE WITH YOUR APIs SUBDOMAIN }.lambda-url.{ REPLACE WITH YOUR REGION }.on.aws/customer/123456' \
      --header 'Accept: application/json' \
      --header 'Content-Type: application/json' \
      --data-raw ''

Output:

    {"path":"/customer/123456","queryString":""}

Lambda Function URLs vs Amazon API Gateway

In the introduction section, I mentioned that we used Amazon API Gateway for public APIs before the function URLs feature. So even though the feature helps a lot for specific use cases, I think it’s worth comparing both to have more realistic expectations.

Amazon API Gateway features

- Authentication and authorization

- Request throttling

- Usage plans and quotas

- API Keys

- AWS WAF integration

- WebSocket support

- CORS support

- Built-in custom domain support

Function URLs

- AWS_IAM authentication

- CORS support

So function URLs are missing many of these features. The question is, do we need all of them for all our APIs? Chances are that a single public endpoint is good enough for many use cases, and it costs a lot less, since there is no extra charge on top of the Lambda invocations themselves.

Using Function URLs with Aliases

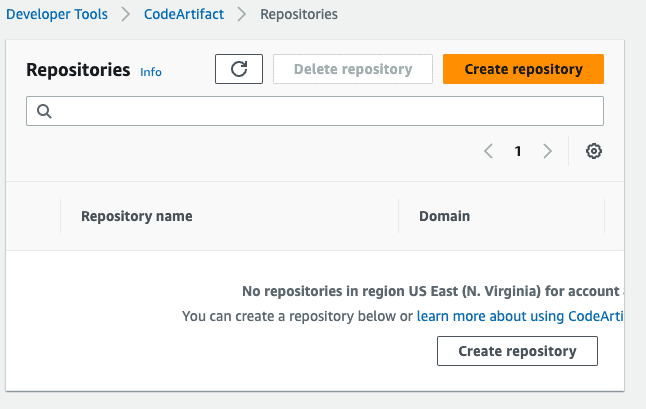

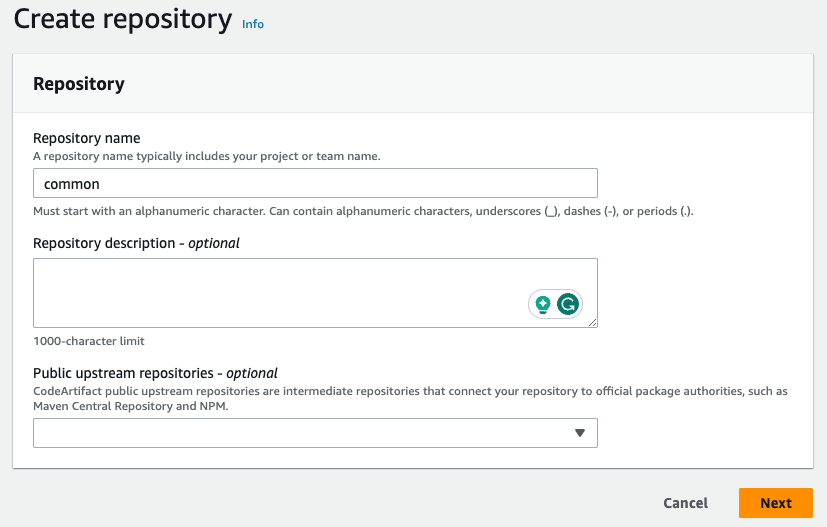

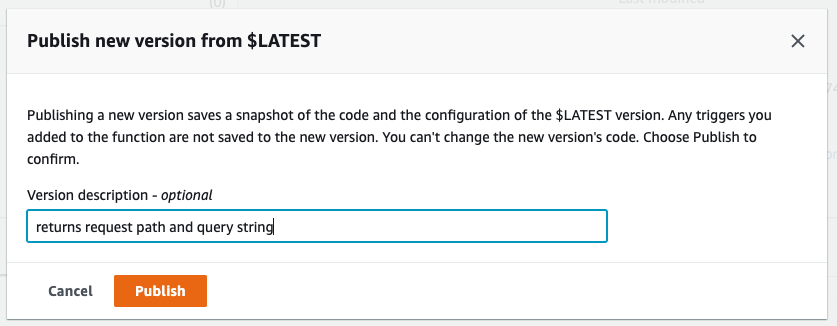

We can also have different URLs for different aliases. To demonstrate this feature, I will publish the code above that returns the path and query string as a new version:

Switch to the Versions tab and click Publish new version.

In the dialog box, give a meaningful description and click Publish.

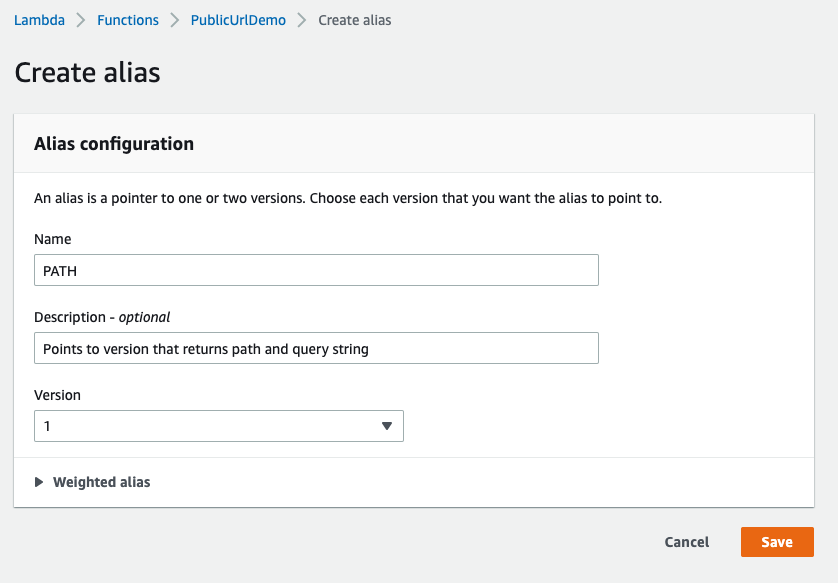

Then switch to the Aliases tab, create an alias, and click Save.

Now you can create a new function URL for this alias. While inside the new alias, click on the Create function URL button and follow the same steps you did with the main function (with NONE as authentication type).

If you go to the alias function URL section now, you should be able to see the new URL, which is entirely different from the main function URL (which points to the $LATEST version automatically):

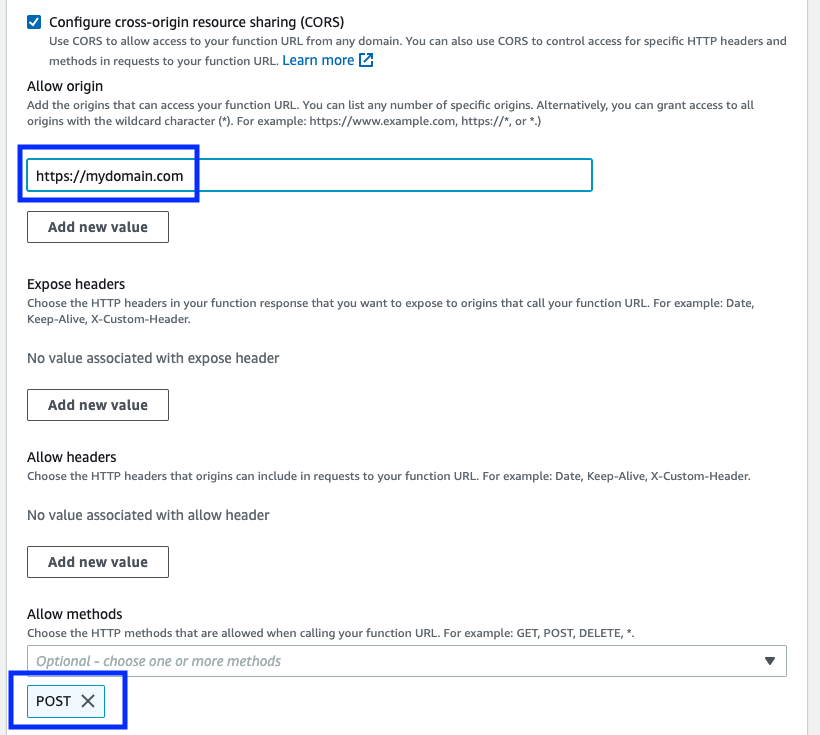

CORS

You can also enable CORS to control which origins can call your API from a browser. For example, you can allow only the domain where your frontend lives and only accept POST requests.
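To make that concrete, here is a small sketch of such a configuration as a JavaScript object. The field names `AllowOrigins`, `AllowMethods`, `AllowHeaders` and `MaxAge` mirror the function URL CORS settings; the origin is a placeholder, and `isAllowedByCors` is a hypothetical helper that only approximates the check a browser performs on a preflight request. Keep in mind that CORS is enforced by browsers, so tools like curl are not affected by it.

```javascript
// CORS settings for a function URL (the origin is a placeholder).
const corsConfig = {
  AllowOrigins: ['https://www.example.com'],
  AllowMethods: ['POST'],
  AllowHeaders: ['content-type'],
  MaxAge: 300, // seconds a browser may cache the preflight result
};

// Hypothetical helper: simplified model of a browser's preflight decision.
function isAllowedByCors(config, origin, method) {
  const originOk = config.AllowOrigins.includes('*') || config.AllowOrigins.includes(origin);
  const methodOk = config.AllowMethods.includes('*') || config.AllowMethods.includes(method);
  return originOk && methodOk;
}

console.log(isAllowedByCors(corsConfig, 'https://www.example.com', 'POST'));   // true
console.log(isAllowedByCors(corsConfig, 'https://other.example', 'POST'));     // false
console.log(isAllowedByCors(corsConfig, 'https://www.example.com', 'DELETE')); // false
```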

Part 3: Clean Up

If you followed the steps of this post, you might want to clean up the resources you created. You can do so by following the steps below:

- Delete the Lambda function (PublicUrlDemo)

- Delete IAM policy (lambda-invoke-function-url-policy)

- Delete IAM user (lambda-invoke-function-url-demo-user)

Conclusion

On multiple occasions, I have needed a simple API to carry out a simple task. My go-to service was always Lambda, but the fact that a Lambda function could only be called from other AWS services or from the command line by authenticated users was a deal-breaker in most scenarios. The ability to develop APIs that can be invoked from anywhere is a significant improvement to the service overall.